Quick Sort, a cornerstone of algorithm design, offers a powerful and efficient way to sort data. Understanding its mechanisms and optimization techniques is crucial for programmers and data scientists. This article dives deep into the world of Quick Sort, exploring its inner workings, optimization strategies, and practical applications.

Understanding Quick Sort

Quick Sort is a highly efficient sorting algorithm, renowned for its speed and effectiveness in a wide range of applications. It stands out due to its use of the divide-and-conquer strategy, a powerful problem-solving paradigm that breaks down a complex problem into smaller, more manageable subproblems. Let’s delve into the core concept of Quick Sort and understand what makes it tick.

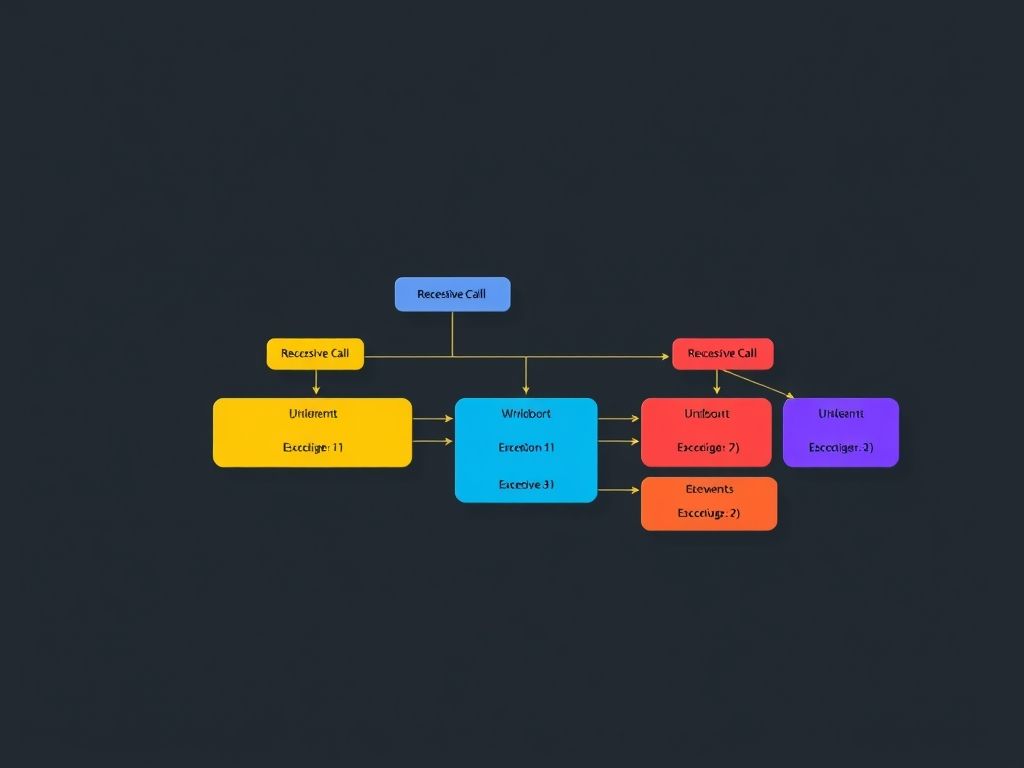

At its heart, Quick Sort operates by selecting a ‘pivot’ element from the array. The array is then partitioned into two sub-arrays: elements less than the pivot and elements greater than the pivot (elements equal to the pivot can go on either side, depending on the partitioning scheme). The pivot element is placed in its correct sorted position. This process is then recursively applied to the sub-arrays until the entire array is sorted. This recursive nature is a key aspect of Quick Sort’s divide-and-conquer approach.

The partitioning step is crucial. It involves iterating through the array and comparing each element to the pivot. Elements smaller than the pivot are moved to the left side of the array, while elements larger than the pivot are moved to the right. This ensures that after each partitioning step, the pivot element is in its final sorted position.
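The partitioning step can be sketched in a few lines. The version below is a minimal illustration, assuming the Lomuto scheme with the last element as the pivot; the names `partition` and `quick_sort` are chosen for this example, and other schemes (such as Hoare's) arrange the elements differently.

```python
def partition(items, lo, hi):
    """Lomuto partition of items[lo..hi], using items[hi] as the pivot.

    Returns the index where the pivot ends up.
    """
    pivot = items[hi]
    boundary = lo  # items[lo:boundary] holds the elements smaller than the pivot
    for i in range(lo, hi):
        if items[i] < pivot:
            items[i], items[boundary] = items[boundary], items[i]
            boundary += 1
    # Place the pivot between the two groups: its final sorted position.
    items[boundary], items[hi] = items[hi], items[boundary]
    return boundary


def quick_sort(items, lo=0, hi=None):
    """Sort items in place by partitioning and recursing on both sides."""
    if hi is None:
        hi = len(items) - 1
    if lo < hi:
        p = partition(items, lo, hi)
        quick_sort(items, lo, p - 1)
        quick_sort(items, p + 1, hi)


data = [7, 2, 1, 6, 8, 5, 3, 4]
quick_sort(data)
print(data)  # [1, 2, 3, 4, 5, 6, 7, 8]
```

Elements equal to the pivot stay on the right-hand side here, because the comparison is a strict `<`.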

The choice of the pivot element significantly impacts the algorithm’s performance. Ideally, the pivot should be the median of the array, splitting the array into two equal halves. However, finding the exact median takes extra work at every partitioning step, which usually costs more than the better split saves. In practice, various strategies are used to select the pivot, such as choosing the first element, the last element, or a random element. Different pivot selection strategies can lead to different performance characteristics, especially in worst-case scenarios.

The average-case time complexity of Quick Sort is O(n log n), where ‘n’ is the number of elements in the array. This makes it one of the fastest sorting algorithms in practice. However, in the worst-case scenario, when the pivot is consistently chosen poorly (e.g., always the smallest or largest element), the time complexity degrades to O(n^2). This highlights the importance of choosing a good pivot selection strategy to avoid the worst-case performance. Understanding how to mitigate this worst-case scenario is crucial for effective implementation.
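The gap between the two cases can be observed by counting comparisons. The sketch below is an illustration only: `count_comparisons` and the `choose_pivot` callback are names invented for this example, and it uses an explicit stack so that the deliberately bad case does not hit Python's recursion limit.

```python
import random


def count_comparisons(items, choose_pivot):
    """Quick-sort a copy of items and return the number of element comparisons."""
    items = list(items)
    comparisons = 0
    stack = [(0, len(items) - 1)]  # explicit stack instead of recursion
    while stack:
        lo, hi = stack.pop()
        if lo >= hi:
            continue
        p = choose_pivot(lo, hi)
        items[p], items[hi] = items[hi], items[p]  # move the pivot to the end
        pivot, boundary = items[hi], lo
        for i in range(lo, hi):
            comparisons += 1
            if items[i] < pivot:
                items[i], items[boundary] = items[boundary], items[i]
                boundary += 1
        items[boundary], items[hi] = items[hi], items[boundary]
        stack.append((lo, boundary - 1))
        stack.append((boundary + 1, hi))
    return comparisons


n = 1000
already_sorted = list(range(n))
# Always picking the last element of sorted input yields the largest element every time.
worst = count_comparisons(already_sorted, lambda lo, hi: hi)
typical = count_comparisons(already_sorted, lambda lo, hi: random.randint(lo, hi))
print(worst)    # 499500, which is n * (n - 1) / 2
print(typical)  # varies from run to run, usually far smaller
```

With the last element as pivot on sorted input, each partition removes only one element, so the comparison count is the sum 1 + 2 + ... + (n - 1).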

How does Quick Sort differ from other sorting algorithms? Unlike algorithms like Bubble Sort or Insertion Sort, which have a time complexity of O(n^2) in the average and worst cases, Quick Sort’s average-case complexity of O(n log n) makes it significantly faster for large datasets. Compared to Merge Sort, which also has a time complexity of O(n log n), Quick Sort often performs better in practice due to its lower constant factors and its in-place, cache-friendly access pattern. However, Merge Sort has a guaranteed O(n log n) time complexity, while Quick Sort can degrade to O(n^2) in the worst case.

Let’s illustrate with a simple example. Consider the array [7, 2, 1, 6, 8, 5, 3, 4]. Let’s choose the first element, 7, as the pivot.

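1. Partitioning: Elements smaller than 7 are moved to the left, and elements larger than 7 are moved to the right, resulting in [2, 1, 6, 5, 3, 4, 7, 8]. (This ordering assumes a partition that preserves the relative order within each side; in-place schemes may arrange each side differently.)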

2. Recursion: Quick Sort is then recursively applied to the sub-arrays [2, 1, 6, 5, 3, 4] and [8].

3. The process continues until each sub-array is sorted.
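The three steps above can be reproduced with a short sketch. It assumes a simple, non-in-place partition that builds new lists, which is what keeps the relative order shown in step 1; the function names are illustrative.

```python
def partition_around_first(items):
    """Split items into (smaller, pivot, larger), using items[0] as the pivot."""
    pivot, rest = items[0], items[1:]
    smaller = [x for x in rest if x < pivot]
    larger = [x for x in rest if x >= pivot]
    return smaller, pivot, larger


def quick_sort_copy(items):
    """Return a sorted copy of items (not in place)."""
    if len(items) <= 1:
        return list(items)
    smaller, pivot, larger = partition_around_first(items)
    return quick_sort_copy(smaller) + [pivot] + quick_sort_copy(larger)


smaller, pivot, larger = partition_around_first([7, 2, 1, 6, 8, 5, 3, 4])
print(smaller, pivot, larger)  # [2, 1, 6, 5, 3, 4] 7 [8]
print(quick_sort_copy([7, 2, 1, 6, 8, 5, 3, 4]))  # [1, 2, 3, 4, 5, 6, 7, 8]
```

This form is easy to read but allocates new lists at every level, so it gives up the memory advantage of an in-place partition.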

This example demonstrates the basic steps of Quick Sort. The recursive nature allows it to efficiently sort the entire array by repeatedly partitioning it into smaller sub-arrays. The efficiency of Quick Sort hinges on minimizing the number of comparisons and swaps, which is directly influenced by the pivot selection.

In the context of algorithm optimization, Quick Sort presents numerous opportunities for improvement. Techniques like randomized pivot selection, three-way partitioning, and tail-call optimization can significantly enhance its performance, especially in scenarios with duplicate elements or large datasets. These optimizations aim to reduce the likelihood of encountering the worst-case scenario and improve the overall efficiency of the algorithm. Further, the Quick Sort algorithm is a fundamental concept in computer science, and understanding it is crucial for developing efficient sorting solutions.

Understanding the core concept of Quick Sort is just the first step. The next step involves exploring various techniques to optimize its performance and address potential challenges.

Optimizing Quick Sort

Following our understanding of the core principles of Quick Sort, as discussed in the previous chapter, this chapter delves into techniques for algorithm optimization, specifically focusing on enhancing Quick Sort’s performance. While Quick Sort boasts an average-case time complexity of O(n log n), its worst-case scenario of O(n^2) can significantly degrade its efficiency. This chapter explores strategies to mitigate these risks and further refine the algorithm.

One of the most critical aspects of optimizing Quick Sort is selecting an appropriate pivot element. A poorly chosen pivot can lead to unbalanced partitions, effectively turning Quick Sort into a much slower algorithm. Several strategies exist to address this issue.

- Random Pivot Selection: Instead of consistently choosing the first or last element as the pivot, randomly selecting an element reduces the likelihood of encountering the worst-case scenario, especially with already sorted or nearly sorted data. This introduces a degree of randomness, making the algorithm less susceptible to predictable input patterns.

- Median-of-Three: This technique involves selecting the median value among the first, middle, and last elements of the subarray as the pivot. This approach often provides a better representation of the data’s distribution, leading to more balanced partitions. For example, if the subarray is [8, 2, 5, 1, 9, 3, 7, 4, 6], the first element is 8, the middle is 9, and the last is 6. The median of these three (6, 8, and 9) is 8, which would then be selected as the pivot.

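- Median-of-Medians: This more sophisticated technique selects a pivot that is guaranteed to lie reasonably close to the true median of the data, which bounds Quick Sort’s worst case at O(n log n). The guarantee comes at a price: the selection step has a large constant factor, so this variant is usually slower than random or median-of-three selection on typical inputs and is mainly of interest when worst-case bounds matter.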
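The first two strategies are small enough to sketch directly. The helper names below are assumptions made for illustration; each returns the index of the chosen pivot within the inclusive range `lo..hi`, so a partition routine can swap that element into place before partitioning.

```python
import random


def random_pivot_index(items, lo, hi):
    """Pick a pivot index uniformly at random from lo..hi (inclusive)."""
    return random.randint(lo, hi)


def median_of_three_index(items, lo, hi):
    """Return the index of the median of the first, middle, and last elements."""
    mid = (lo + hi) // 2
    candidates = sorted([lo, mid, hi], key=lambda i: items[i])
    return candidates[1]


sub = [8, 2, 5, 1, 9, 3, 7, 4, 6]
idx = median_of_three_index(sub, 0, len(sub) - 1)
print(idx, sub[idx])  # 0 8
```

The output matches the example in the list: of 8, 9, and 6, the median is 8, which sits at index 0.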

Another optimization technique involves handling small subarrays differently. Quick Sort’s overhead can become significant for small subarrays due to the recursive calls.

- Insertion Sort for Small Subarrays: When the subarray size falls below a certain threshold (typically between 10 and 20 elements), switching to a simpler algorithm like Insertion Sort can be more efficient. Insertion Sort has lower overhead on small inputs and performs well on nearly sorted data. A common variant leaves the small subarrays unsorted and finishes with a single Insertion Sort pass over the whole array, which by then is nearly sorted because every element is already close to its final position. This hybrid approach combines the strengths of both algorithms.
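A minimal sketch of the hybrid follows. The cutoff of 16 is an assumed value inside the range mentioned above, not a recommendation; the best threshold depends on the language and hardware and is normally found by measurement. The middle-element pivot is likewise just one possible choice.

```python
CUTOFF = 16  # assumed threshold; tune by measurement


def insertion_sort(items, lo, hi):
    """Sort items[lo..hi] in place."""
    for i in range(lo + 1, hi + 1):
        current = items[i]
        j = i - 1
        while j >= lo and items[j] > current:
            items[j + 1] = items[j]
            j -= 1
        items[j + 1] = current


def hybrid_quick_sort(items, lo=0, hi=None):
    """Quick sort that hands small ranges to insertion sort."""
    if hi is None:
        hi = len(items) - 1
    if hi - lo + 1 <= CUTOFF:
        insertion_sort(items, lo, hi)
        return
    # Middle-element pivot, moved to the end for a Lomuto partition.
    mid = (lo + hi) // 2
    items[mid], items[hi] = items[hi], items[mid]
    pivot, boundary = items[hi], lo
    for i in range(lo, hi):
        if items[i] < pivot:
            items[i], items[boundary] = items[boundary], items[i]
            boundary += 1
    items[boundary], items[hi] = items[hi], items[boundary]
    hybrid_quick_sort(items, lo, boundary - 1)
    hybrid_quick_sort(items, boundary + 1, hi)
```

Calling `hybrid_quick_sort(values)` sorts the list `values` in place; lists at or below the cutoff never reach the partitioning code at all.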

Addressing recursion depth is also crucial. Deep recursion can lead to stack overflow errors, especially with large datasets.

- Tail Recursion Optimization: The second recursive call in Quick Sort is a tail call, so it can be replaced by a loop. Recursing only into the smaller partition and looping on the larger one bounds the stack depth at O(log n), even when the partitions are badly unbalanced. Languages with tail call optimization may perform part of this transformation automatically; in languages without it, the loop has to be written by hand.

- Iterative Quick Sort: Converting the recursive Quick Sort implementation into an iterative one using an explicit stack data structure removes the dependence on the call stack, and with it the risk of stack overflow. This approach manually manages the subarrays still to be processed, providing greater control over memory usage.
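Both ideas can be combined in one sketch: an explicit stack replaces the recursion, and the larger side of each partition is deferred while the loop continues on the smaller side. The function name and the middle-element pivot are assumptions made for this illustration.

```python
def iterative_quick_sort(items):
    """Sort items in place without recursion, using an explicit stack of ranges."""
    stack = [(0, len(items) - 1)]
    while stack:
        lo, hi = stack.pop()
        while lo < hi:
            # Lomuto partition with the middle element as the pivot.
            mid = (lo + hi) // 2
            items[mid], items[hi] = items[hi], items[mid]
            pivot, boundary = items[hi], lo
            for i in range(lo, hi):
                if items[i] < pivot:
                    items[i], items[boundary] = items[boundary], items[i]
                    boundary += 1
            items[boundary], items[hi] = items[hi], items[boundary]
            # Defer the larger side and keep looping on the smaller one,
            # so the stack holds only about log2(n) ranges at any time.
            if boundary - lo < hi - boundary:
                stack.append((boundary + 1, hi))
                hi = boundary - 1
            else:
                stack.append((lo, boundary - 1))
                lo = boundary + 1
```

Because the pending ranges live in an ordinary list, their number is limited by available memory rather than by the interpreter's recursion limit.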

Understanding and effectively implementing these optimization techniques is essential for maximizing the efficiency of Quick Sort. These strategies help in handling worst-case scenarios, choosing pivot elements effectively, and managing recursion depth, ultimately leading to a more robust and performant sorting algorithm.

Furthermore, understanding the Quick Sort algorithm in different contexts allows for adaptation to specific data characteristics. For instance, if the data is known to have many duplicate elements, a three-way partitioning scheme (elements less than, equal to, and greater than the pivot) can significantly improve performance.
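A three-way partition can be sketched as follows. This is one common formulation (often called the Dutch national flag partition), shown here with a middle-element pivot as an assumption; the function name is illustrative.

```python
def three_way_quick_sort(items, lo=0, hi=None):
    """Sort items in place, grouping elements equal to the pivot in one pass."""
    if hi is None:
        hi = len(items) - 1
    if lo >= hi:
        return
    pivot = items[(lo + hi) // 2]
    lt, i, gt = lo, lo, hi
    # Invariant: items[lo:lt] < pivot, items[lt:i] == pivot, items[gt+1:hi+1] > pivot
    while i <= gt:
        if items[i] < pivot:
            items[lt], items[i] = items[i], items[lt]
            lt += 1
            i += 1
        elif items[i] > pivot:
            items[i], items[gt] = items[gt], items[i]
            gt -= 1
        else:
            i += 1
    three_way_quick_sort(items, lo, lt - 1)  # strictly smaller elements
    three_way_quick_sort(items, gt + 1, hi)  # strictly larger elements
```

Elements equal to the pivot are never visited again, so an input consisting of a few distinct values repeated many times needs only a few levels of recursion.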

These optimizations are not mutually exclusive; they can be combined to achieve even greater performance gains. The specific combination that yields the best results depends on the characteristics of the data being sorted and the computational environment.

In the next chapter, we will explore real-world applications of Quick Sort, emphasizing its importance in various domains. We will highlight the advantages of using Quick Sort over other sorting algorithms in these contexts and see how these optimizations play a critical role in those applications.

Real-World Applications of Quick Sort

Quick Sort, known for its efficiency, finds extensive application across various domains. Its speed and relatively low overhead make it a preferred choice in scenarios where performance is critical. Building upon the strategies for *optimizing Quick Sort* discussed in the previous chapter – including pivot selection and handling worst-case scenarios – we can now explore how these optimized versions are deployed in practice.

One of the most significant areas where Quick Sort shines is in database management. Databases frequently need to sort large volumes of data for indexing, querying, and reporting. Quick Sort’s average-case time complexity of O(n log n) makes it well suited to the parts of these tasks that run in memory; data too large for memory is typically handled by merge-based external sorting. For instance, when retrieving records ordered by a specific field, the database might use Quick Sort to arrange the in-memory data, enabling efficient searching. Furthermore, optimized versions of Quick Sort, incorporating techniques to mitigate worst-case performance, are often preferred to ensure consistent performance even with skewed data. The *optimization of Quick Sort* is paramount in this context, as even minor improvements can lead to substantial gains in query response times, especially in high-traffic databases.

Another critical application is in large-scale data processing. Big data frameworks, such as Apache Spark and Hadoop, often rely on sorting algorithms as part of their data transformation pipelines. Quick Sort’s ability to handle large datasets efficiently makes it a valuable component in these systems. Consider a scenario where you need to analyze website traffic data to identify popular pages. The data might be sorted by page views using Quick Sort as a preliminary step before further aggregation and analysis. Here, the speed of Quick Sort is crucial in minimizing processing time and enabling timely insights.

Furthermore, Quick Sort is a fundamental algorithm used in many software libraries. The C++ Standard Library (historically the Standard Template Library, STL) and similar libraries in other languages often include optimized sorting routines built on Quick Sort for general-purpose sorting needs. These implementations are carefully crafted to provide robust performance across a wide range of input data. For example, the `std::sort` function in C++ is typically implemented as a hybrid (often called introsort) that combines Quick Sort with Insertion Sort for small subarrays and a Heap Sort fallback when the recursion gets too deep, which keeps the worst case at O(n log n). This hybrid approach leverages the strengths of different algorithms to achieve good overall performance. The Quick Sort algorithm is deeply embedded in these libraries.

The advantages of using Quick Sort over other sorting algorithms, such as Bubble Sort or Insertion Sort, become particularly evident in these contexts. While Bubble Sort and Insertion Sort have simpler implementations, their O(n^2) time complexity makes them impractical for large datasets. Merge Sort, another popular sorting algorithm with O(n log n) time complexity, requires additional memory for merging sorted subarrays. Quick Sort, on the other hand, typically operates in-place, needing only O(log n) extra space for the recursion stack in a careful implementation. This in-place characteristic is a significant advantage in memory-constrained environments or when dealing with extremely large datasets.

However, it’s important to acknowledge Quick Sort’s potential drawbacks. As discussed previously, its worst-case time complexity is O(n^2), which can occur when the pivot element is consistently poorly chosen. This can be mitigated through techniques like randomized pivot selection or using the median-of-three approach. These methods help to ensure that the pivot is more likely to be close to the median of the data, thereby balancing the partitions and avoiding worst-case scenarios.

In summary, Quick Sort’s speed and efficiency make it a valuable tool in various real-world applications, including database management, large-scale data processing, and software libraries. Its ability to operate in-place and its average-case time complexity of O(n log n) provide significant advantages over other sorting algorithms. While it’s essential to be aware of its potential worst-case performance, the techniques discussed in the previous chapter provide effective strategies for mitigating this risk and ensuring robust performance. Understanding how to optimize algorithms like Quick Sort is crucial for building efficient and scalable systems.

Conclusions

Quick Sort stands as a versatile and efficient sorting algorithm. By understanding its core principles and implementing optimization strategies, you can significantly improve your code’s efficiency and effectiveness when dealing with large datasets. This article provides a comprehensive guide to mastering Quick Sort.