Dive into data analysis with K-means clustering, a powerful technique for grouping similar data points. This guide explains how the algorithm works, where it is applied, and how to tune it so that it produces useful insights.

Understanding K-Means Clustering

At its heart, K-means clustering is a simple yet powerful unsupervised learning algorithm used to group data points into distinct clusters. The goal is to partition *n* observations into *k* clusters, where each observation belongs to the cluster with the nearest mean (centroid), serving as a prototype of the cluster. This chapter will delve into the fundamental concepts behind this widely used technique.

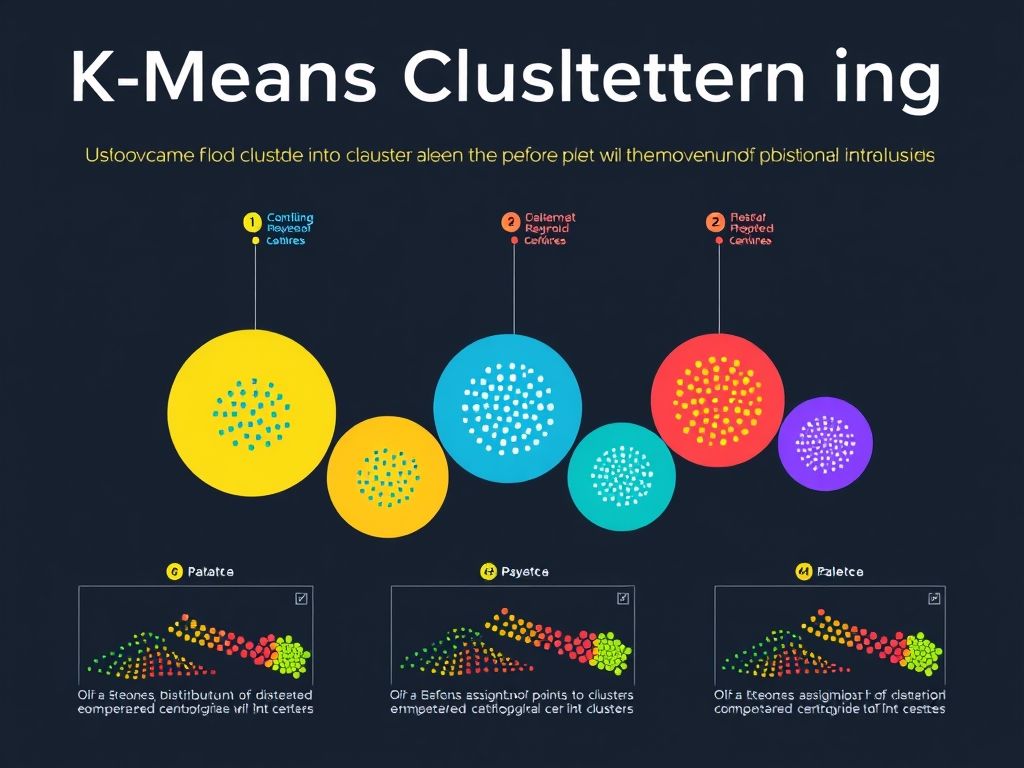

The K-means algorithm operates through an iterative process, striving to minimize the within-cluster variance. The algorithm can be broken down into the following steps:

- Initialization: The first step involves selecting *k* initial centroids. These can be chosen randomly from the dataset or with smarter seeding schemes such as k-means++. The choice of initial centroids can significantly affect the final clustering result.

- Assignment: Each data point is then assigned to the nearest centroid according to a chosen distance metric. The most common metric is Euclidean distance, the straight-line distance between two points. Variants of the algorithm swap in other measures depending on the nature of the data, such as Manhattan distance (used by K-medians) or cosine similarity (used by spherical K-means); the standard mean-based update is only guaranteed to reduce the objective under squared Euclidean distance.

- Update: Once all data points have been assigned to clusters, the centroids are recalculated. The new centroid for each cluster is the mean of all the data points assigned to that cluster.

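- Iteration: The assignment and update steps are repeated until the cluster assignments stop changing (or the centroids move less than a small tolerance) or a predefined maximum number of iterations is reached. Each pass can only lower or keep the within-cluster sum of squares, so the process converges, but only to a local optimum that depends on the initial centroids.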
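
The four steps above map onto a short loop. The sketch below assumes NumPy and squared Euclidean distance; the function name `kmeans` and its parameters (`max_iter`, `tol`, `seed`) are illustrative choices, not a particular library's API, and the data is made up.

```python
import numpy as np


def kmeans(X, k, max_iter=100, tol=1e-6, seed=0):
    """Minimal K-means (Lloyd's algorithm) on an (n, d) array X.

    Returns (labels, centroids, wcss). Illustrative sketch, not production code.
    """
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialization: pick k distinct data points at random as starting centroids.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(max_iter):
        # Assignment: squared Euclidean distance from every point to every centroid.
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Update: move each centroid to the mean of its points (keep it if the cluster is empty).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Iteration: stop once the centroids have effectively stopped moving.
        shift = np.linalg.norm(new_centroids - centroids)
        centroids = new_centroids
        if shift < tol:
            break
    # Final assignment against the final centroids, plus the within-cluster sum of squares.
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    labels = d2.argmin(axis=1)
    wcss = float(d2[np.arange(len(X)), labels].sum())
    return labels, centroids, wcss


# Two well-separated groups of 2-D points (made-up data for illustration).
X = np.array([[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
              [8.0, 8.0], [8.2, 7.9], [7.8, 8.1]])
labels, centroids, wcss = kmeans(X, k=2)
print(labels, wcss)
```

Running the loop from a different `seed` may change which group gets label 0, but on data this well separated the grouping itself should not change.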

The choice of *k*, the number of clusters, is a crucial parameter in K-means clustering. Selecting an appropriate value for *k* is often challenging and may require experimentation and domain knowledge. Methods like the elbow method or silhouette analysis can be used to help determine the optimal number of clusters.

To further illustrate the concept, consider an analogy: imagine a bag of colorful marbles scattered on a table that you want to sort into piles by color. You start by setting out a few bowls, each tagged with the color of a randomly picked marble (the initial centroids). Then you put each marble into the bowl whose tag color is closest to the marble's own color. Once every marble is placed, you retag each bowl with the average color of the marbles it holds. You repeat this cycle of assigning marbles and retagging bowls until the tags no longer change noticeably. This is essentially what K-means clustering does with data points.

The distance metric is central to the K-means algorithm. As mentioned earlier, Euclidean distance is the common choice, but other measures can suit some data better. For text data, for example, cosine similarity is often preferred because it depends only on the angle between two vectors, capturing similarity in direction (which words appear, and in what proportions) rather than magnitude (how long the document is). The metric determines how the algorithm judges the “nearness” of data points and, consequently, which clusters it finds.
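
To see how the metric changes what counts as “near,” the sketch below compares three distances on two hypothetical word-count vectors that point in the same direction but differ in length. It uses only Python's standard library; the vectors and function names are made up for illustration.

```python
import math


def euclidean(a, b):
    """Straight-line distance."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    """Sum of absolute coordinate differences ("city block" distance)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine_distance(a, b):
    """1 minus the cosine of the angle between a and b; ignores vector length."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms


short_doc = [1, 2, 0]    # hypothetical term counts for a short document
long_doc = [10, 20, 0]   # same word proportions, ten times as long

print(euclidean(short_doc, long_doc))        # about 20.1: lengths differ a lot
print(manhattan(short_doc, long_doc))        # 27
print(cosine_distance(short_doc, long_doc))  # about 0: same direction
```

Euclidean and Manhattan distance treat the longer document as far away, while cosine distance treats the two as having the same topic mix.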

The search for *k* is often exploratory. Different values of *k* are tried, and the resulting clusterings are compared on criteria such as the within-cluster sum of squares (WCSS), which measures how compact the clusters are, or the silhouette score, which weighs how close each point is to its own cluster against how close it is to the nearest other cluster.
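
For reference, the two criteria can be written out using their standard definitions. With clusters $C_1, \dots, C_k$ and centroids $\mu_1, \dots, \mu_k$, WCSS is the quantity K-means tries to minimize. For a point $i$, $a(i)$ is its mean distance to the other points in its own cluster and $b(i)$ is its smallest mean distance to the points of any other cluster; the silhouette score of a clustering is the average $\bar{s}$ over all $n$ points.

```latex
\mathrm{WCSS} = \sum_{j=1}^{k} \sum_{x \in C_j} \lVert x - \mu_j \rVert^{2},
\qquad
\mu_j = \frac{1}{|C_j|} \sum_{x \in C_j} x

s(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}},
\qquad
\bar{s} = \frac{1}{n} \sum_{i=1}^{n} s(i)
```

Each $s(i)$ lies between -1 and 1: values near 1 mean the point sits well inside its cluster, values near 0 mean it lies between two clusters, and by convention $s(i) = 0$ for a point that is alone in its cluster.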

Clustering algorithms like K-means are valuable tools for data analysis, but it’s essential to understand their limitations. K-means implicitly assumes that clusters are roughly spherical and of similar size, which real-world datasets often violate. K-means is also sensitive to outliers, because a single extreme point can pull a cluster's mean, and therefore its centroid, away from the bulk of the data. Despite these limitations, K-means remains a widely used and effective clustering algorithm, particularly for large datasets, where its low cost per iteration is an advantage.

Understanding these fundamental concepts provides a solid foundation for applying K-means clustering in various data analysis tasks. In the next chapter, we will explore real-world applications of K-means.

Practical Applications of K-Means

Having covered the fundamentals of K-means clustering in the previous chapter, “Understanding K-Means Clustering” (the algorithm, its iterative process, and the role of distance metrics), it’s time to look at real-world applications of this technique. K-means, a core clustering algorithm, is used across many domains because of its simplicity and efficiency. Let’s examine some specific examples and the advantages K-means offers in each.

Customer Segmentation

One of the most common and impactful applications of K-means is customer segmentation. Businesses can use K-means to group customers by attributes such as purchase history, demographics, website activity, and survey responses. For instance, a retail company might find segments that analysts, after inspecting each cluster’s profile, label “high-value customers,” “price-sensitive customers,” and “occasional buyers.” The company can then tailor its marketing strategies, product offerings, and customer service to the needs of each group. This targeted approach can increase customer satisfaction, improve retention, and ultimately raise revenue. K-means suits this task because it handles large datasets at relatively low computational cost, which makes it feasible for businesses with extensive customer bases.

Image Recognition

K-means also plays a significant role in image recognition and computer vision. Here each pixel in an image can be treated as a data point, and K-means can cluster the pixels by their color values. This enables image segmentation, in which different regions of the image are identified and separated. For example, in medical imaging, K-means can be used to segment different tissues or organs in an MRI scan, aiding diagnosis and treatment planning. Similarly, in satellite imagery, K-means can help identify land cover types such as forests, water bodies, and urban areas. Because each iteration is cheap, K-means scales to the millions of pixels a single image can contain, which makes it well suited to these applications. Trying several values of *k* and comparing the results also helps decide how many distinct regions a segmentation should have.
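
Treating pixels as data points comes down to a reshape: an H×W×3 RGB image becomes an (H·W)×3 array, K-means clusters the colors, and the labels are reshaped back into an H×W segmentation map. The sketch below assumes NumPy and uses a made-up 4×4 two-color image; `kmeans` here is a compact illustrative helper, not a library function.

```python
import numpy as np


def kmeans(X, k, n_iter=20, seed=0):
    """Compact Lloyd-style K-means: random data points as initial centroids."""
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        # Assign each point to its nearest centroid (squared Euclidean distance).
        labels = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
        # Move each non-empty cluster's centroid to the mean of its points.
        for j in range(k):
            if np.any(labels == j):
                centroids[j] = X[labels == j].mean(axis=0)
    # Recompute labels against the final centroids.
    labels = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
    return labels, centroids


# Hypothetical 4x4 RGB image: left half red, right half blue.
image = np.zeros((4, 4, 3))
image[:, :2] = [200, 30, 30]
image[:, 2:] = [30, 30, 200]

pixels = image.reshape(-1, 3)                     # one row per pixel, columns = R, G, B
labels, palette = kmeans(pixels, k=2)
segmentation = labels.reshape(image.shape[:2])    # cluster id for every pixel
quantized = palette[labels].reshape(image.shape)  # each pixel replaced by its cluster's color
print(segmentation)
```

The same `palette[labels]` step is how K-means is commonly used for color quantization: every pixel is redrawn using only the *k* cluster colors.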

Document Categorization

Another valuable application of K-means is document categorization. With the explosion of online information, automatically organizing documents has become essential. K-means can group documents by content, making information easier to organize and retrieve. For example, a news aggregator might use K-means to group articles into clusters that correspond to topics such as politics, sports, and business, making it easier for users to find what interests them. Similarly, a library could use K-means to group books by subject matter. K-means itself only groups similar documents; names such as “politics” or “sports” are assigned afterward by inspecting each cluster. The approach works because each document can be represented as a high-dimensional vector of word frequencies, and K-means handles such vectors efficiently.
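
The word-frequency representation can be built with a few lines of standard-library Python. The sketch below uses four made-up headlines; each becomes a vector with one coordinate per vocabulary word, scaled to unit length so that Euclidean distance (what standard K-means uses) ranks documents the same way cosine similarity does. Real pipelines typically add tokenization rules, stop-word removal, and TF-IDF weighting, which are omitted here.

```python
import math
from collections import Counter

# Hypothetical headlines: two about politics, two about sports.
docs = [
    "election vote parliament vote",
    "parliament election minister",
    "goal match team goal",
    "team match player",
]

# One dimension per distinct word across the corpus.
vocab = sorted({word for doc in docs for word in doc.split()})


def term_vector(doc):
    """Word-frequency vector over `vocab`, scaled to unit length."""
    counts = Counter(doc.split())
    vec = [counts[word] for word in vocab]
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]


def cosine_similarity(a, b):
    # The vectors are already unit length, so the dot product is the cosine.
    return sum(x * y for x, y in zip(a, b))


vectors = [term_vector(d) for d in docs]
print(len(vocab))                                 # 8 dimensions per document
print(cosine_similarity(vectors[0], vectors[1]))  # politics vs politics: > 0
print(cosine_similarity(vectors[0], vectors[2]))  # politics vs sports: 0.0 (no shared words)
```

For unit vectors, squared Euclidean distance equals 2 minus twice the cosine similarity, which is why normalizing first lets a Euclidean K-means respect cosine similarity.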

Other Applications

Beyond these specific examples, K-means finds applications in various other fields, including:

* Anomaly Detection: Identifying unusual data points that deviate significantly from the rest of the data.

* Genetics: Clustering genes based on their expression patterns to identify genes involved in specific biological processes.

* Finance: Segmenting stocks based on their historical performance to identify investment opportunities.
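
One common way to apply K-means to anomaly detection is sketched below under stated assumptions: fit centroids on data believed to be normal, score each new point by its distance to the nearest centroid, and flag scores above a threshold taken from the training data. The data, the 99th-percentile threshold, and the deterministic farthest-point initialization (swapped in so the example is reproducible) are all illustrative choices. NumPy is assumed.

```python
import numpy as np


def fit_centroids(X, k, n_iter=20):
    """Lloyd-style K-means with farthest-point initialization (deterministic)."""
    centroids = [X[0]]
    while len(centroids) < k:
        # Next seed: the point farthest from every centroid chosen so far.
        d = np.min([np.linalg.norm(X - c, axis=1) for c in centroids], axis=0)
        centroids.append(X[d.argmax()])
    centroids = np.array(centroids, dtype=float)
    for _ in range(n_iter):
        labels = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2).argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centroids[j] = X[labels == j].mean(axis=0)
    return centroids


def anomaly_scores(points, centroids):
    """Distance from each point to its nearest centroid."""
    return np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2).min(axis=1)


rng = np.random.default_rng(0)
# Hypothetical "normal" data: two tight groups around (0, 0) and (10, 10).
normal = np.vstack([rng.normal([0.0, 0.0], 0.5, size=(50, 2)),
                    rng.normal([10.0, 10.0], 0.5, size=(50, 2))])
centroids = fit_centroids(normal, k=2)
threshold = np.percentile(anomaly_scores(normal, centroids), 99)

new_points = np.array([[0.2, -0.1], [9.8, 10.3], [5.0, 5.0], [30.0, 0.0]])
flags = anomaly_scores(new_points, centroids) > threshold
print(flags)  # the last two points lie far from both groups
```

Fitting on data believed to be clean matters here: if outliers are included in training, they can drag a centroid toward themselves and lower their own scores.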

The versatility of K-means stems from its simple implementation and its applicability to any data that can be represented as numerical feature vectors. However, it’s important to note that K-means also has limitations. One key challenge is choosing the number of clusters (*k*), which can significantly affect the results.

In the next chapter, “Optimizing K-Means for Effective Results,” we will delve into methods for choosing the optimal number of clusters and techniques for handling different data types. We will also explore how to evaluate the performance of K-means clustering and address potential challenges.


Optimizing K-Means for Effective Results

Having explored the practical applications of K-means in diverse fields like customer segmentation, image recognition, and document categorization, as discussed in the previous chapter, it’s now crucial to delve into optimizing the algorithm for achieving the most effective results. This involves carefully selecting the optimal number of clusters (*k*), handling various data types, evaluating performance, and addressing potential challenges.

One of the most critical aspects of K-means clustering is determining the appropriate number of clusters, *k*. Choosing the wrong *k* can lead to misleading or unhelpful results. Several methods exist to help determine the optimal *k*:

- The Elbow Method: This method involves plotting the within-cluster sum of squares (WCSS) against different values of *k*. WCSS measures the compactness of the clusters; lower values indicate tighter clusters. The plot typically resembles an arm, and the “elbow point” – the point where the rate of decrease in WCSS sharply diminishes – is often considered a good estimate for *k*. It signifies the point where adding more clusters yields diminishing returns in terms of cluster compactness.

- The Silhouette Score: The silhouette score measures how similar an object is to its own cluster compared to other clusters. It ranges from -1 to 1, where a high value indicates that the object is well-matched to its own cluster and poorly matched to neighboring clusters. By calculating the average silhouette score for different values of *k*, you can identify the *k* that maximizes this score, suggesting a good separation between clusters.

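- The Gap Statistic: This method compares the (log) WCSS of the clustered data with its expected value under a reference distribution that has no cluster structure, typically data drawn uniformly over the range of the original features. A large gap indicates that the clustering structure is much stronger than would be expected by chance. A simple rule picks the *k* with the largest gap; the original proposal instead picks the smallest *k* whose gap is at least the next value's gap minus one standard error.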
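
The elbow method and silhouette analysis can both be run in one loop over candidate values of *k*. The sketch below assumes NumPy, uses made-up data with three well-separated groups, and swaps in a deterministic farthest-point initialization so the result is reproducible. The gap statistic is omitted because it additionally requires simulating reference datasets. Libraries such as scikit-learn provide tuned implementations (`sklearn.cluster.KMeans`, `sklearn.metrics.silhouette_score`).

```python
import numpy as np


def kmeans(X, k, n_iter=50):
    """Lloyd-style K-means with farthest-point initialization. Returns (labels, wcss)."""
    centroids = [X[0]]
    while len(centroids) < k:
        d = np.min([np.linalg.norm(X - c, axis=1) for c in centroids], axis=0)
        centroids.append(X[d.argmax()])
    centroids = np.array(centroids, dtype=float)
    for _ in range(n_iter):
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centroids[j] = X[labels == j].mean(axis=0)
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    labels = d2.argmin(axis=1)
    return labels, float(d2.min(axis=1).sum())


def silhouette(X, labels):
    """Mean silhouette s(i) = (b - a) / max(a, b); singleton clusters score 0."""
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    clusters = np.unique(labels)
    scores = []
    for i in range(len(X)):
        own = labels == labels[i]
        if own.sum() == 1:
            scores.append(0.0)
            continue
        a = D[i, own].sum() / (own.sum() - 1)  # mean distance to the other members
        b = min(D[i, labels == c].mean() for c in clusters if c != labels[i])
        scores.append((b - a) / max(a, b))
    return float(np.mean(scores))


rng = np.random.default_rng(42)
centers = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]  # hypothetical group centers
X = np.vstack([rng.normal(c, 0.3, size=(20, 2)) for c in centers])

results = {}
for k in range(2, 7):
    labels, wcss = kmeans(X, k)
    results[k] = (wcss, silhouette(X, labels))
    print(f"k={k}  WCSS={wcss:8.2f}  silhouette={results[k][1]:.3f}")

best_k = max(results, key=lambda k: results[k][1])
print("k with highest mean silhouette:", best_k)
```

Plotting the WCSS column against *k* gives the elbow curve; here the sharp drop should stop at *k* = 3, the same value the silhouette criterion selects.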

Beyond choosing *k*, handling different data types is also essential. K-means, in its basic form, works best with numerical data. However, real-world datasets often contain categorical or mixed data types.

- Numerical Data: Typically, numerical data is standardized or normalized before applying K-means. Standardization (scaling to have a mean of 0 and a standard deviation of 1) and normalization (scaling to a range between 0 and 1) prevent variables with larger scales from dominating the distance calculations.

- Categorical Data: For categorical data, one-hot encoding is a common technique for converting categorical variables into numerical form, although it increases dimensionality by adding one column per category. Alternatives include distance measures designed for categorical data, such as Hamming distance (the number of mismatched attributes), or clustering algorithms designed for categorical data, such as k-modes, which replaces cluster means with modes.

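- Mixed Data: When dealing with mixed data types, Gower’s distance is often used. It handles numerical and categorical variables together and yields a dissimilarity between 0 and 1 for each pair of data points. Because the K-means mean update assumes Euclidean geometry, Gower’s distance is usually paired with a medoid-based method such as k-medoids or with hierarchical clustering; k-prototypes is a K-means extension that handles mixed data directly.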
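
The three preprocessing options above can be sketched on toy data. The example assumes NumPy; the customer table, the categorical records, and the helper names (`one_hot`, `hamming`, `gower_distance`) are hypothetical. The Gower function implements only the basic unweighted form (range-normalized absolute difference for numeric features, simple matching for categorical ones) and omits the feature weights and missing-value handling of the original definition.

```python
import numpy as np

# --- Numerical data: standardization vs. min-max normalization ---
# Hypothetical customers: [annual income, store visits per month]
X = np.array([[52_000.0, 3.0], [61_000.0, 12.0], [58_000.0, 1.0], [75_000.0, 8.0]])
X_std = (X - X.mean(axis=0)) / X.std(axis=0)                      # mean 0, std 1 per column
X_minmax = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))  # each column mapped to [0, 1]
# (A constant column would make either denominator zero; drop such columns first.)


# --- Categorical data: one-hot encoding and Hamming distance ---
def one_hot(records):
    """Replace each categorical column with one 0/1 indicator column per category."""
    categories = [sorted({r[i] for r in records}) for i in range(len(records[0]))]
    return [[int(value == c) for value, cats in zip(r, categories) for c in cats]
            for r in records]


def hamming(a, b):
    """Number of attributes on which two records disagree."""
    return sum(x != y for x, y in zip(a, b))


purchases = [("card", "north", "gold"), ("cash", "north", "gold"), ("card", "south", "basic")]
encoded = one_hot(purchases)  # 3 categorical columns -> 6 indicator columns


# --- Mixed data: basic (unweighted) Gower distance ---
def gower_distance(a, b, records, numeric):
    """Average per-feature dissimilarity in [0, 1]; `numeric` flags numeric columns."""
    total = 0.0
    for i, is_num in enumerate(numeric):
        if is_num:
            values = [r[i] for r in records]
            spread = max(values) - min(values)
            # Range-normalized absolute difference; a constant column contributes 0.
            total += abs(a[i] - b[i]) / spread if spread else 0.0
        else:
            # Simple matching: 0 if the categories agree, 1 otherwise.
            total += 0.0 if a[i] == b[i] else 1.0
    return total / len(numeric)


people = [(25, 40_000, "north", "yes"), (35, 60_000, "north", "no"), (45, 80_000, "south", "yes")]
numeric = [True, True, False, False]
print(hamming(purchases[0], purchases[1]))                    # 1
print(gower_distance(people[0], people[1], people, numeric))  # 0.5
print(gower_distance(people[0], people[2], people, numeric))  # 0.75
```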

Evaluating the performance of K-means clustering is crucial to ensure the quality of the results. Besides the silhouette score, which is also used for model selection as described above, the Davies-Bouldin index offers another view: for each cluster it finds the most similar other cluster, where similarity is the ratio of the two clusters' combined scatter to the distance between their centroids, and it averages these worst-case ratios over all clusters. A lower Davies-Bouldin index indicates more compact, better-separated clusters.
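
Written out, with $s_i$ the average distance from cluster $i$'s points to its centroid $c_i$, the index is the mean over clusters of $\max_{j \ne i} (s_i + s_j) / \lVert c_i - c_j \rVert$. The NumPy sketch below follows that definition with Euclidean distances on made-up points; scikit-learn ships an equivalent `sklearn.metrics.davies_bouldin_score`.

```python
import numpy as np


def davies_bouldin(X, labels):
    """Davies-Bouldin index with Euclidean distances (lower is better)."""
    clusters = np.unique(labels)
    centroids = np.array([X[labels == c].mean(axis=0) for c in clusters])
    # Scatter s_i: mean distance from the points of cluster i to its centroid.
    scatter = np.array([
        np.linalg.norm(X[labels == c] - centroids[i], axis=1).mean()
        for i, c in enumerate(clusters)
    ])
    k = len(clusters)
    worst = []
    for i in range(k):
        # Similarity to every other cluster: R_ij = (s_i + s_j) / distance between centroids.
        ratios = [(scatter[i] + scatter[j]) / np.linalg.norm(centroids[i] - centroids[j])
                  for j in range(k) if j != i]
        # Keep the most similar (worst) neighbor for cluster i.
        worst.append(max(ratios))
    return float(np.mean(worst))


# Made-up data: two tight pairs of points, 10 units apart.
X = np.array([[0.0, 0.0], [0.0, 2.0], [10.0, 0.0], [10.0, 2.0]])
good = np.array([0, 0, 1, 1])  # groups the nearby pairs together
bad = np.array([0, 1, 0, 1])   # groups points that are 10 units apart
print(davies_bouldin(X, good))  # (1 + 1) / 10 = 0.2
print(davies_bouldin(X, bad))   # (5 + 5) / 2 = 5.0
```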

Despite its widespread use, K-means faces several potential challenges:

- Sensitivity to Initial Centroids: The initial placement of cluster centroids can significantly affect the final clustering. To mitigate this, K-means is often run multiple times with different random initializations, and the solution with the lowest WCSS is kept. Seeding schemes such as k-means++, which spread the initial centroids apart, further reduce this sensitivity.

- Assumption of Spherical Clusters: K-means assumes that clusters are spherical and equally sized. This assumption can lead to poor results when dealing with non-spherical or irregularly shaped clusters.

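- Scalability Issues: While K-means is relatively efficient, each iteration computes the distance from every point to every centroid, so the cost grows with the number of points, clusters, and features and can become significant for very large datasets. Mini-batch K-means improves scalability by updating the centroids from small random batches of data at each step, trading a little accuracy for speed.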
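
The restart strategy from the first challenge can be sketched as follows, assuming NumPy. It pairs restarts with k-means++ seeding (the first centroid is a random data point; each later one is drawn with probability proportional to its squared distance from the nearest centroid already chosen). The function names and the three-group data are illustrative; scikit-learn's `KMeans` offers the same combination through its `init="k-means++"` and `n_init` parameters.

```python
import numpy as np


def kmeans_pp_init(X, k, rng):
    """k-means++ seeding: later centroids are sampled with probability proportional
    to their squared distance from the nearest centroid chosen so far."""
    centroids = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        d2 = np.min([((X - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(X[rng.choice(len(X), p=d2 / d2.sum())])
    return np.array(centroids, dtype=float)


def lloyd(X, centroids, n_iter=50):
    """Standard assignment/update loop; returns (labels, centroids, wcss)."""
    for _ in range(n_iter):
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(len(centroids)):
            if np.any(labels == j):
                centroids[j] = X[labels == j].mean(axis=0)
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    labels = d2.argmin(axis=1)
    return labels, centroids, float(d2.min(axis=1).sum())


def kmeans_best_of(X, k, n_init=10, seed=0):
    """Run K-means from n_init k-means++ seedings and keep the lowest-WCSS result."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(n_init):
        result = lloyd(X, kmeans_pp_init(X, k, rng))
        if best is None or result[2] < best[2]:
            best = result
    return best


# Hypothetical data: three groups of 30 points each.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(c, 0.4, size=(30, 2)) for c in [(0, 0), (6, 0), (3, 5)]])
labels, centroids, wcss = kmeans_best_of(X, k=3)
print(wcss)
```

Keeping the lowest-WCSS run is a direct implementation of the selection rule described above; k-means++ makes each individual run less likely to need rescuing in the first place.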

Addressing these challenges and carefully considering the methods for choosing *k* and handling data types are essential for unlocking the full potential of K-means clustering and obtaining meaningful and actionable insights from your data.

The next chapter will explore advanced techniques and variations of K-means to address some of these limitations and further enhance its applicability in diverse scenarios.

Conclusions

Mastering K-means clustering empowers you to uncover hidden patterns within data. By understanding its core principles and practical applications, you can unlock valuable insights for informed decision-making in various domains.