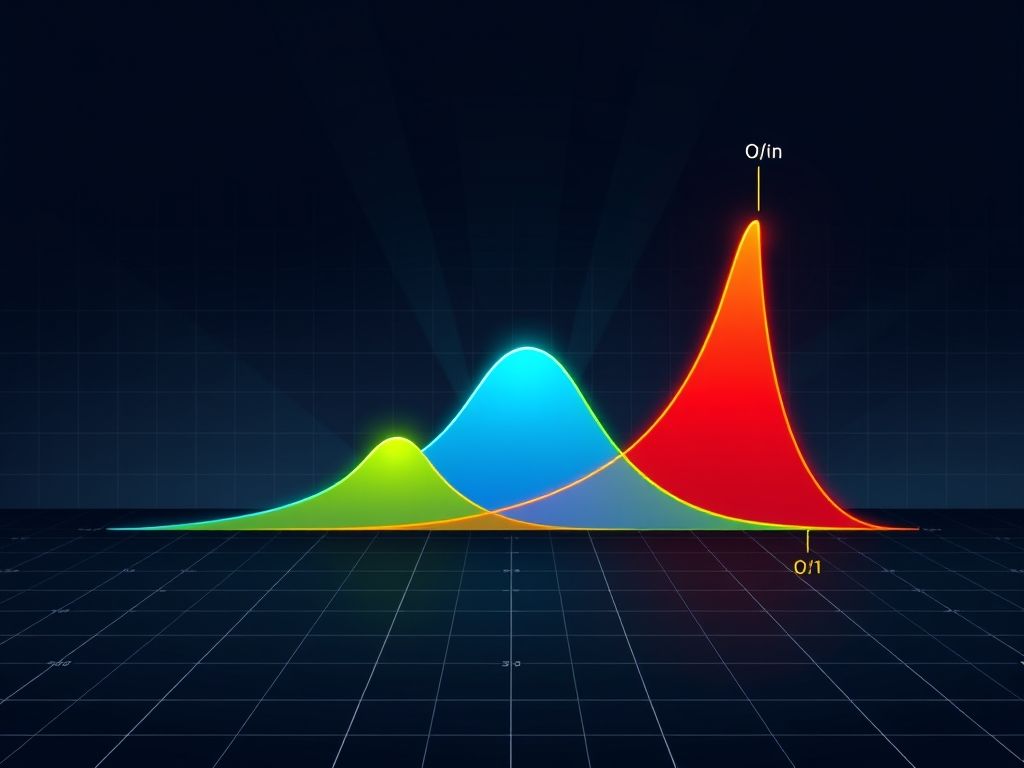

In the world of programming, efficiency is paramount. Algorithms, the step-by-step procedures for solving problems, can vary significantly in their speed and resource consumption. Big O notation provides a standardized way to analyze an algorithm’s performance as the input size grows, enabling developers to choose the most efficient solution for their needs.

Decoding Big O Notation

Big O notation is a cornerstone of computer science, especially when it comes to *algorithm efficiency analysis*. It’s a way to classify algorithms according to how their runtime or space requirements grow as the input size grows. Think of it as a shorthand for describing the **performance** characteristics of an algorithm. It doesn’t give you the exact time an algorithm will take to run, but rather provides a high-level understanding of how its execution time scales. This is vital for choosing the right algorithm for a particular task, especially when dealing with large datasets.

The primary purpose of Big O is to provide an upper bound on the growth rate of an algorithm’s resource usage (typically time or space). It allows us to compare different algorithms and predict how they will perform as the input size increases. This is a critical aspect of **algorithm time analysis**.

Here’s a breakdown of some common Big O notations:

* O(1) – Constant Time: This is the most favorable growth rate. An algorithm with O(1) complexity takes roughly the same amount of time to execute, regardless of the input size. A classic example is accessing an element in an array by its index. No matter how large the array is, accessing `array[5]` takes the same amount of time.

* O(log n) – Logarithmic Time: The execution time grows in proportion to the logarithm of the input size. This is typical of algorithms that halve the remaining work at each step, and binary search is the prime example. On a sorted array of 1024 elements, binary search needs about 10 halving steps (log2 1024 = 10), so it makes at most 11 comparisons. Doubling the array size adds only one more comparison.

* O(n) – Linear Time: In linear time, the execution time grows directly proportionally to the input size. A simple example is iterating through an array to find a specific element. If the array has 10 elements, it might take 10 steps in the worst case. If it has 100 elements, it might take 100 steps. The time scales linearly with the input.

* O(n log n) – Linearithmic Time: This complexity is typical of efficient comparison sorts such as merge sort and quicksort (on average; quicksort degrades to O(n^2) in its worst case). It grows more slowly than O(n^2) but faster than O(n). In merge sort, the `n log n` arises because the input is halved repeatedly, giving about `log n` levels of subproblems, and each level does O(n) work in total to merge its pieces.

* O(n^2) – Quadratic Time: Algorithms with quadratic time complexity have their execution time increase proportionally to the square of the input size. Nested loops are a common cause of this. For instance, comparing every element in an array to every other element (e.g., a naive sorting algorithm) would result in O(n^2) complexity. If the array has 10 elements, it might take 100 steps. If it has 100 elements, it might take 10,000 steps.

* O(2^n) – Exponential Time: Exponential time complexity means that the execution time roughly doubles with each element added to the input. These algorithms are generally impractical for large inputs. A classic example is generating all possible subsets of a set: a set of n elements has 2^n subsets, so adding one element doubles the amount of work.
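To make the difference between linear and logarithmic growth concrete, the sketch below counts comparisons instead of measuring wall-clock time. The function names `linear_search_steps` and `binary_search_steps` are illustrative and do not come from any library, and both assume a sorted list of integers.

```python
def linear_search_steps(items, target):
    """Return how many comparisons a left-to-right scan makes."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(items, target):
    """Return how many loop iterations binary search makes on a sorted list."""
    lo, hi = 0, len(items) - 1
    steps = 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if items[mid] == target:
            break
        if items[mid] < target:
            lo = mid + 1   # discard the left half
        else:
            hi = mid - 1   # discard the right half
    return steps


# Worst case for both: the target is larger than every element.
for n in (1_000, 2_000):
    data = list(range(n))
    print(n, linear_search_steps(data, n), binary_search_steps(data, n))
```

Doubling the input from 1,000 to 2,000 elements doubles the linear count but adds a single step to the binary search.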

Understanding these notations is crucial for the **performance evaluation** of algorithms. While Big O notation simplifies the analysis by focusing on the dominant term, it provides valuable insights into how an algorithm will behave with increasing data. Choosing an algorithm with a lower Big O complexity can significantly improve the performance of your application, especially when dealing with large datasets.

In the next chapter, we will delve into practical techniques for analyzing the time complexity of algorithms, focusing on identifying dominant operations and their impact on overall performance. We’ll also highlight the importance of considering different input sizes and how Big O notation simplifies this process.

Analyzing Algorithm Performance

Following our introduction to Big O notation in the previous chapter, “Decoding Big O Notation,” where we discussed common complexities like O(1), O(log n), O(n), O(n log n), O(n^2), and O(2^n), we now delve into practical techniques for analyzing the time complexity of algorithms. Understanding how to analyze algorithm performance is crucial for writing efficient code, especially when dealing with large datasets.

The core of algorithm time analysis lies in identifying the *dominant operations* within an algorithm. A dominant operation is the one that contributes the most significantly to the algorithm’s overall execution time as the input size grows. For example, in a sorting algorithm, the comparison operation might be the dominant operation. Similarly, in a search algorithm, accessing an element in an array could be the dominant operation.

To effectively analyze an algorithm’s time complexity, consider the following steps:

- Identify the input size: Determine what constitutes the “n” in the Big O notation. This could be the number of elements in an array, the number of nodes in a graph, or the magnitude of a number.

- Pinpoint dominant operations: Look for loops, nested loops, and recursive calls. These are often where the bulk of the execution time is spent. Analyze the number of times these operations are executed in relation to the input size.

- Express the number of operations as a function of n: For instance, if a loop iterates through all ‘n’ elements of an array, the number of operations is proportional to ‘n’. If there’s a nested loop, the number of operations might be proportional to ‘n^2’.

- Simplify to Big O notation: Once you have a function representing the number of operations, drop the constant factors and lower-order terms. This simplification is the essence of Big O notation, as it focuses on the asymptotic behavior of the algorithm.
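The steps above can be sketched by instrumenting a small function and counting its basic operations. `count_operations` is a hypothetical example, not a standard routine. It runs one single loop followed by one nested loop, so its exact count is n^2 + n.

```python
def count_operations(n):
    """Count basic operations in a single loop followed by a nested loop."""
    ops = 0
    for _ in range(n):          # contributes n operations
        ops += 1
    for _ in range(n):          # contributes n * n operations
        for _ in range(n):
            ops += 1
    return ops                  # exactly n**2 + n


for n in (10, 100, 1_000):
    total = count_operations(n)
    # The ratio to n**2 approaches 1 as n grows, which is why the
    # lower-order term n is dropped and the function is O(n^2).
    print(n, total, total / n**2)
```

As n grows, the ratio of the total to n^2 settles toward 1, so the nested loop alone determines the classification.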

The importance of considering different input sizes cannot be overstated. An algorithm that performs well for small inputs might become impractical for larger datasets. Big O notation helps us understand how an algorithm scales with increasing input size. For instance, an O(n^2) algorithm will become significantly slower than an O(n log n) algorithm as ‘n’ grows larger.
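A quick calculation shows how fast the gap between these two classes widens. The helper `growth_ratio` below is an illustrative name, and the sketch compares the raw functions n^2 and n log2 n only, ignoring constant factors.

```python
import math


def growth_ratio(n):
    """Return how many times larger n^2 is than n * log2(n)."""
    return n**2 / (n * math.log2(n))


for n in (10, 1_000, 1_000_000):
    print(f"n = {n:>9,}   n^2 is about {growth_ratio(n):,.0f}x larger than n log2 n")
```

At n = 10 the two differ by a factor of about 3; at one million elements the factor is roughly 50,000.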

Let’s consider a simple example:

```
for (int i = 0; i < n; i++) {
    System.out.println(i);
}
```

In this case, the dominant operation is `System.out.println(i)`, which is executed 'n' times. Therefore, the time complexity of this code snippet is O(n).

Now, consider a nested loop:

```
for (int i = 0; i < n; i++) {
    for (int j = 0; j < n; j++) {
        System.out.println(i + j);
    }
}
```

Here, the `System.out.println(i + j)` operation is executed n * n = n^2 times. Thus, the time complexity is O(n^2).

Big O notation simplifies performance evaluation by providing a standardized way to express the upper bound of an algorithm’s execution time. It allows us to compare the efficiency of different algorithms without getting bogged down in implementation details or hardware specifications.

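It’s also important to remember that Big O is most often quoted for the *worst-case* scenario, although strictly it is an upper bound that can be stated for any case (the O(n log n) figure for quicksort, for example, is an average-case bound). An algorithm might perform better on typical inputs than its worst-case bound suggests.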
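The gap between the best and worst case can be large. As a sketch, the hypothetical helper below counts the comparisons made by insertion sort, an algorithm that is O(n^2) in the worst case, on already-sorted and on reverse-sorted input.

```python
def insertion_sort_comparisons(values):
    """Sort a copy of values with insertion sort and return the comparison count."""
    items = list(values)
    comparisons = 0
    for i in range(1, len(items)):
        current = items[i]
        j = i - 1
        while j >= 0:
            comparisons += 1
            if items[j] <= current:
                break                 # current is already in place
            items[j + 1] = items[j]   # shift the larger element right
            j -= 1
        items[j + 1] = current
    return comparisons


n = 100
print(insertion_sort_comparisons(range(n)))              # sorted input: n - 1
print(insertion_sort_comparisons(range(n - 1, -1, -1)))  # reversed input: n * (n - 1) / 2
```

For 100 elements that is 99 comparisons in the best case and 4,950 in the worst, which is why a complexity figure should always say which case it describes.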

In the next chapter, “Optimizing for Efficiency,” we will explore strategies for improving the Big O performance of algorithms, including techniques for reducing time complexity and making informed trade-offs. We will delve into practical examples and code snippets to demonstrate how these optimization strategies can be applied in real-world scenarios.

Optimizing for Efficiency

Following our analysis of algorithm performance in the previous chapter, we now turn to strategies for improving the Big O performance of algorithms. This chapter explores practical examples and code snippets demonstrating how to reduce time complexity, along with a discussion of the trade-offs between different optimization techniques.

One of the most fundamental approaches to optimization is selecting the right data structure. For instance, searching for an element in an unsorted array has a time complexity of O(n), but using a hash table can reduce this to O(1) on average. Similarly, sorting algorithms offer varying performance characteristics. While bubble sort has a time complexity of O(n^2), algorithms like merge sort and quicksort boast O(n log n) in average cases.

Consider the following Python example demonstrating the difference between searching in a list versus a set:

```python
import time

def search_list(lst, target):
    # Linear scan: checks elements one by one, O(n) in the worst case.
    for item in lst:
        if item == target:
            return True
    return False

def search_set(st, target):
    # Hash lookup: O(1) on average.
    return target in st

# Example usage:
list_data = list(range(100000))
set_data = set(range(100000))
target_value = 99999  # last element, the worst case for the list scan

# perf_counter() is a monotonic, high-resolution clock suited to timing.
start_time = time.perf_counter()
search_list(list_data, target_value)
list_time = time.perf_counter() - start_time

start_time = time.perf_counter()
search_set(set_data, target_value)
set_time = time.perf_counter() - start_time

print(f"List search time: {list_time:.6f} seconds")
print(f"Set search time: {set_time:.6f} seconds")
```

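On a typical run, this code shows that a membership test on a set (implemented as a hash table) is far faster than scanning a list, especially here, where the target is the last element and the scan must visit all 100,000 items. A single timing is noisy, but the gap illustrates the impact of data structure choice on performance.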

Another crucial technique is algorithm design. Dynamic programming, for example, can often transform exponential time complexity problems into polynomial time problems by storing and reusing intermediate results. Divide and conquer strategies can also break down a problem into smaller, more manageable subproblems, leading to improved efficiency.
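The Fibonacci recurrence is a common way to illustrate this. The sketch below compares plain recursion with a memoized version that uses the standard library's `functools.lru_cache`. The names `fib_naive` and `fib_memo` are illustrative, and the one-element list `calls` is just a simple way to count invocations.

```python
from functools import lru_cache


def fib_naive(n, counter):
    """Plain recursion: recomputes the same subproblems repeatedly."""
    counter[0] += 1
    if n < 2:
        return n
    return fib_naive(n - 1, counter) + fib_naive(n - 2, counter)


@lru_cache(maxsize=None)
def fib_memo(n):
    """Same recurrence, but each result is cached after its first computation."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


calls = [0]
print(fib_naive(20, calls), calls[0])               # call count grows exponentially with n
print(fib_memo(20), fib_memo.cache_info().misses)   # one computation per distinct n
```

For n = 20 the naive version makes 21,891 calls, while the memoized version computes only 21 distinct values, at the cost of storing them.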

Loop optimization is another critical area. Minimizing the number of iterations and reducing the work done within each iteration can significantly impact performance. Loop unrolling and strength reduction are examples of techniques used here. Furthermore, avoiding redundant calculations within loops is essential.
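As a minimal sketch of avoiding redundant calculations (it does not cover loop unrolling or strength reduction), consider computing the running mean of a list. Both function names are hypothetical. The first version re-sums the prefix at every position, while the second carries a running total forward.

```python
def running_means_naive(values):
    """Recompute the prefix sum from scratch at every position: O(n^2)."""
    return [sum(values[: i + 1]) / (i + 1) for i in range(len(values))]


def running_means_incremental(values):
    """Carry the running total forward instead of recomputing it: O(n)."""
    means = []
    total = 0
    for count, value in enumerate(values, start=1):
        total += value
        means.append(total / count)
    return means


data = [2, 4, 6, 8]
print(running_means_naive(data))
print(running_means_incremental(data))
```

Both return the same list, but the incremental version touches each element once.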

Understanding the impact of different input sizes is fundamental when optimizing. An algorithm that performs well for small inputs might become unacceptably slow for larger datasets. This is where Big O notation truly shines, allowing us to predict how an algorithm’s runtime will scale with increasing input size. Analyzing an algorithm’s time complexity helps in identifying these scaling bottlenecks.

However, optimization often involves trade-offs. For example, reducing time complexity might increase space complexity, or vice versa. Choosing the right optimization strategy depends on the specific constraints of the problem and the available resources. Sometimes, a slight increase in development time to implement a more efficient algorithm is worth the long-term performance gains. A comprehensive performance evaluation should consider these trade-offs.
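Duplicate detection is one place where the time and space trade-off is easy to see. The two functions below are illustrative sketches: the first uses almost no extra memory but compares every pair, and the second trades O(n) extra memory for a single pass. The second assumes the items are hashable.

```python
def has_duplicates_low_memory(items):
    """Compare every pair: O(n^2) time, O(1) extra space."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_fast(items):
    """Remember seen values in a set: O(n) average time, O(n) extra space."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


print(has_duplicates_low_memory([3, 1, 4, 1, 5]))
print(has_duplicates_fast([3, 1, 4, 5]))
```

Which version is preferable depends on whether memory or running time is the tighter constraint.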

Furthermore, the constant factors hidden within Big O notation can sometimes be significant. While an algorithm with O(n) complexity will eventually outperform an algorithm with O(n log n) complexity as *n* grows large enough, for smaller values of *n*, the algorithm with the larger complexity might actually be faster due to smaller constant factors. Profiling and benchmarking are essential tools for measuring actual performance and identifying potential bottlenecks in real-world scenarios.
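The effect of constant factors can be sketched with two made-up cost models. The constants 50 and 2 below are assumptions chosen purely for illustration and do not describe any real algorithm. With these values, the O(n log n) model stays cheaper until n passes 2^25, about 33.5 million.

```python
import math


def cost_linear(n):
    """Hypothetical O(n) algorithm with a large constant factor."""
    return 50 * n


def cost_linearithmic(n):
    """Hypothetical O(n log n) algorithm with a small constant factor."""
    return 2 * n * math.log2(n)


for n in (1_000, 1_000_000, 10**9):
    cheaper = "O(n log n)" if cost_linearithmic(n) < cost_linear(n) else "O(n)"
    print(f"n = {n:>13,}: cheaper model is {cheaper}")
```

Real constants are rarely known in advance, which is why measuring with a profiler or benchmark matters more than the model.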

In conclusion, optimizing for efficiency is a multi-faceted process involving careful algorithm design, data structure selection, loop optimization, and a thorough understanding of the trade-offs involved. By applying these strategies and continuously profiling and benchmarking our code, we can significantly improve the performance of our algorithms and build more efficient and scalable applications.

Conclusions

Mastering Big O notation empowers developers to make informed decisions about algorithm selection and design. By understanding time complexity, developers can write efficient code that scales predictably as the input grows. This knowledge is crucial for building robust and performant applications.