Matrix algorithms are fundamental to various fields, from computer graphics to scientific simulations. This guide explores the core concepts of matrix algorithms, emphasizing their speed and efficiency in solving complex problems. Learn how to leverage these powerful tools for faster computation and advanced programming.

Understanding Matrix Algorithms

At the heart of numerous computational tasks lies the power of matrix algorithms. These algorithms are fundamental tools in fields ranging from computer graphics to data analysis, enabling efficient manipulation and analysis of large datasets. This chapter delves into the core concepts of these algorithms, exploring their applications and highlighting their significance in modern computing and programming.

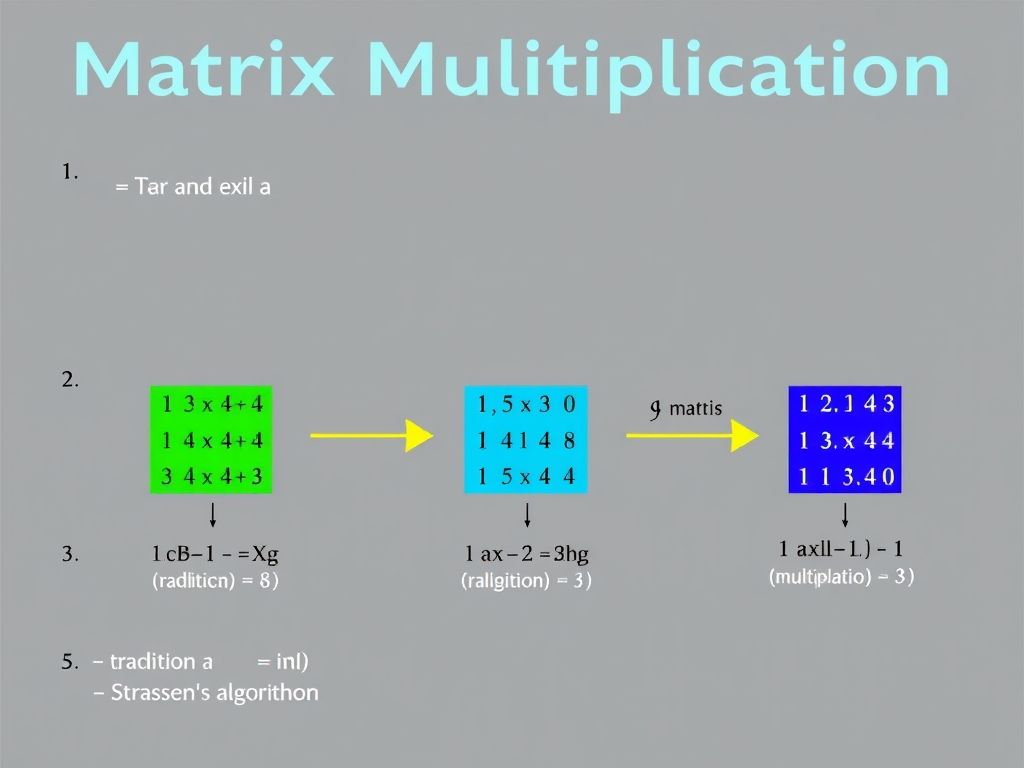

One of the most fundamental operations in matrix algebra is matrix multiplication. Given two matrices, A and B, their product C is computed by taking the dot product of the rows of A with the columns of B. The resulting matrix C has dimensions determined by the number of rows in A and the number of columns in B. While the standard algorithm for matrix multiplication has a time complexity of O(n^3), where n is the size of the matrix, more advanced algorithms like Strassen’s algorithm offer improved performance, especially for large matrices. Understanding the nuances of matrix multiplication is crucial for optimizing performance in various applications.
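As a rough illustration of the standard algorithm described above, here is a minimal pure-Python sketch. The function name `matmul` and the list-of-rows representation are choices made for this example, not something prescribed by the text.

```python
def matmul(A, B):
    """Multiply two matrices given as lists of rows (standard cubic-time method)."""
    n, m, p = len(A), len(B), len(B[0])
    if any(len(row) != m for row in A):
        raise ValueError("the number of columns of A must equal the number of rows of B")
    C = [[0] * p for _ in range(n)]
    for i in range(n):          # each row of A
        for j in range(p):      # each column of B
            for k in range(m):  # dot product of row i of A with column j of B
                C[i][j] += A[i][k] * B[k][j]
    return C


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul(A, B))  # [[19, 22], [43, 50]]
```

The three nested loops are where the O(n^3) cost for square matrices comes from: each of the n^2 output entries needs a dot product of length n.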

Another key concept is matrix decomposition. This involves breaking down a matrix into simpler constituent matrices, which can then be used to solve various problems more efficiently. Two common types of decomposition are LU decomposition and QR decomposition.

LU decomposition factorizes a matrix A into the product of a lower triangular matrix L and an upper triangular matrix U. This decomposition is particularly useful for solving systems of linear equations. Once A is decomposed into L and U, solving Ax = b becomes equivalent to solving Ly = b and then Ux = y, which are much easier to solve since L and U are triangular matrices. In practice the rows of A are usually reordered during the factorization (pivoting) to keep the computation numerically stable, and the same factors can be reused for many right-hand sides b. This is a powerful technique for fast computation.
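The two-step solve can be sketched in pure Python as follows. This is an illustrative version that adds partial pivoting (row swaps), so it actually factors a row-permuted copy of A; the helper names `lu_decompose` and `lu_solve` are made up for this example.

```python
def lu_decompose(A):
    """Return (perm, L, U) such that the rows of A reordered by perm equal L times U."""
    n = len(A)
    U = [list(map(float, row)) for row in A]
    L = [[0.0] * n for _ in range(n)]
    perm = list(range(n))
    for k in range(n):
        # Partial pivoting: move the largest remaining entry of column k to row k.
        p = max(range(k, n), key=lambda r: abs(U[r][k]))
        if U[p][k] == 0.0:
            raise ValueError("matrix is singular")
        U[k], U[p] = U[p], U[k]
        L[k], L[p] = L[p], L[k]
        perm[k], perm[p] = perm[p], perm[k]
        L[k][k] = 1.0
        for i in range(k + 1, n):
            L[i][k] = U[i][k] / U[k][k]       # elimination multiplier
            for j in range(k, n):
                U[i][j] -= L[i][k] * U[k][j]  # eliminate below the pivot
    return perm, L, U


def lu_solve(perm, L, U, b):
    """Solve Ax = b using the factors: forward substitution, then back substitution."""
    n = len(b)
    pb = [b[i] for i in perm]                 # apply the same row order to b
    y = [0.0] * n
    for i in range(n):                        # L y = P b
        y[i] = pb[i] - sum(L[i][j] * y[j] for j in range(i))
    x = [0.0] * n
    for i in reversed(range(n)):              # U x = y
        x[i] = (y[i] - sum(U[i][j] * x[j] for j in range(i + 1, n))) / U[i][i]
    return x


A = [[2, 1, 1], [4, 3, 3], [8, 7, 9]]
b = [7, 19, 49]                               # chosen so that the solution is (1, 2, 3)
perm, L, U = lu_decompose(A)
print([round(v, 6) for v in lu_solve(perm, L, U, b)])
```

Factoring costs O(n^3), but each later solve with the stored factors costs only O(n^2), which is why the decomposition pays off when many right-hand sides share the same A.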

QR decomposition, on the other hand, factorizes a matrix A into the product of an orthogonal matrix Q and an upper triangular matrix R. QR decomposition is frequently used in solving least squares problems and eigenvalue problems. The orthogonal nature of Q ensures numerical stability, making QR decomposition a preferred choice in many applications.
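To make the least squares use case concrete, the following sketch fits a straight line with NumPy's `numpy.linalg.qr`. The data points are invented for illustration and lie exactly on y = 1 + 2x, so the recovered coefficients should be close to 1 and 2.

```python
import numpy as np

# Illustrative data: points that lie exactly on y = 1 + 2x.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 1.0 + 2.0 * x

# Design matrix: a column of ones (intercept) and a column of x values (slope).
A = np.column_stack([np.ones_like(x), x])

# Reduced QR: Q has orthonormal columns (4 x 2), R is upper triangular (2 x 2).
Q, R = np.linalg.qr(A)

# Least squares solution: minimizing ||A c - y|| reduces to solving R c = Q^T y.
coeffs = np.linalg.solve(R, Q.T @ y)
print(coeffs)  # approximately [1. 2.]
```

Because Q has orthonormal columns, multiplying by its transpose does not amplify rounding errors, which is the stability property mentioned above.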

Solving systems of linear equations is a pervasive problem in many scientific and engineering disciplines. Matrix algorithms provide efficient methods for finding solutions to these systems. As mentioned earlier, LU decomposition can be used to solve systems of linear equations. Other methods include Gaussian elimination and iterative methods like Jacobi and Gauss-Seidel. The choice of method depends on the specific characteristics of the system, such as its size, sparsity, and condition number.
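As one example of the iterative methods mentioned above, here is a small pure-Python sketch of Jacobi iteration. The function name, tolerance, and test system are assumptions made for this illustration. The method is only guaranteed to converge for certain matrices, such as the strictly diagonally dominant one used here.

```python
def jacobi(A, b, tol=1e-10, max_iter=500):
    """Approximate the solution of Ax = b by Jacobi iteration."""
    n = len(b)
    x = [0.0] * n
    for _ in range(max_iter):
        # Every component is updated from the previous iterate only.
        x_new = [
            (b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
            for i in range(n)
        ]
        if max(abs(x_new[i] - x[i]) for i in range(n)) < tol:
            return x_new
        x = x_new
    raise RuntimeError("Jacobi iteration did not converge")


# Strictly diagonally dominant system whose exact solution is (1, 2, 3).
A = [[4, 1, 0], [1, 5, 2], [0, 2, 6]]
b = [6, 17, 22]
print([round(v, 6) for v in jacobi(A, b)])
```

Each sweep touches only the nonzero entries of A when a sparse format is used, which is why iterative methods are attractive for large sparse systems where a full factorization would be too expensive.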

Real-world examples of the application of these algorithms are abundant. In computer graphics, matrix transformations are used extensively for rotating, scaling, and translating objects in 3D space. These transformations are represented as matrices, and the application of a transformation involves multiplying the object’s vertices by the corresponding transformation matrix.
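A minimal sketch of this idea, assuming the common convention of 4 x 4 matrices acting on homogeneous coordinates (x, y, z, 1), might look as follows. The helper names are invented for the example.

```python
import math


def mat_mul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def mat_vec(M, v):
    """Apply matrix M to the column vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rotation_z(theta):
    """Rotation by theta radians about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]


def scaling(sx, sy, sz):
    return [[sx, 0.0, 0.0, 0.0], [0.0, sy, 0.0, 0.0],
            [0.0, 0.0, sz, 0.0], [0.0, 0.0, 0.0, 1.0]]


def translation(tx, ty, tz):
    return [[1.0, 0.0, 0.0, tx], [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]


# Compose once: scale first, then rotate, then translate (the rightmost matrix applies first).
transform = mat_mul(translation(2.0, 0.0, 0.0),
                    mat_mul(rotation_z(math.pi / 2), scaling(2.0, 2.0, 2.0)))

vertex = [1.0, 0.0, 0.0, 1.0]  # the point (1, 0, 0) in homogeneous coordinates
moved = mat_vec(transform, vertex)
print([round(c, 6) for c in moved])  # the point ends up at (2, 2, 0), up to rounding
```

Composing the three transformations into one matrix means every vertex of an object needs only a single matrix-vector product.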

In data analysis, matrix algorithms are used for dimensionality reduction, clustering, and classification. For example, Principal Component Analysis (PCA) uses eigenvalue decomposition to identify the principal components of a dataset, allowing for dimensionality reduction while preserving the most important information.
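The PCA procedure can be sketched with NumPy in a few lines. The function `pca` and the tiny dataset are illustrative assumptions. The data lies exactly on a line, so one principal component captures all of its variance.

```python
import numpy as np


def pca(X, k):
    """Project the rows of X onto the top-k principal components."""
    Xc = X - X.mean(axis=0)                 # center each feature
    cov = np.cov(Xc, rowvar=False)          # feature-by-feature covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh: symmetric input, ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:k]   # indices of the k largest eigenvalues
    components = eigvecs[:, order]
    return Xc @ components, eigvals[order]


# Illustrative data: four 2-D points on the line y = 2x.
X = np.array([[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]])
projected, variances = pca(X, k=1)
print(projected.shape)  # (4, 1)
print(variances)        # variance captured by the first component
```

The eigenvalues returned alongside the projection indicate how much variance each retained component explains, which is the usual basis for choosing k.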

Furthermore, in fields like finance, matrix algorithms are used for portfolio optimization and risk management. Covariance matrices are used to model the relationships between different assets, and matrix algorithms are used to find optimal portfolio allocations that minimize risk and maximize returns.

Even in machine learning, matrix algorithms play a critical role. Neural networks, for example, rely heavily on matrix operations for forward propagation and backpropagation. The weights and biases of the network are stored in matrices, and the computations involved in training the network involve numerous matrix multiplications and additions.
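To show how forward propagation reduces to matrix operations, here is a small NumPy sketch of a two-layer network. The layer sizes, the ReLU activation, and all weight values are arbitrary choices made for this example.

```python
import numpy as np


def relu(z):
    """Element-wise rectified linear unit."""
    return np.maximum(z, 0.0)


def forward(X, W1, b1, W2, b2):
    """Forward pass: each layer is a matrix product plus a bias vector."""
    hidden = relu(X @ W1 + b1)
    return hidden @ W2 + b2


X = np.array([[1.0, 2.0]])                  # one sample with two features
W1 = np.array([[1.0, -1.0], [0.0, 0.25]])   # hypothetical first-layer weights
b1 = np.zeros(2)
W2 = np.array([[2.0], [3.0]])               # hypothetical second-layer weights
b2 = np.array([1.0])

print(forward(X, W1, b1, W2, b2))  # [[3.]]
```

Stacking many samples as rows of X turns the whole batch into the same two matrix products, which is what makes these workloads a good fit for optimized matrix libraries.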

The efficiency of these algorithms is paramount, especially when dealing with large datasets. Optimization techniques, such as exploiting sparsity and using parallel computing, are often employed to further improve performance. The choice of algorithm and its implementation can have a significant impact on the overall performance of an application.
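One common way to exploit sparsity is to store only the nonzero entries. The sketch below uses the compressed sparse row (CSR) layout in pure Python. The helper names are invented for this illustration, and production code would normally rely on a dedicated sparse matrix library instead.

```python
def to_csr(dense):
    """Convert a dense matrix (list of rows) into CSR arrays."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))  # where the next row starts in values
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """Multiply a CSR matrix by the vector x, touching only nonzero entries."""
    y = []
    for i in range(len(row_ptr) - 1):
        start, end = row_ptr[i], row_ptr[i + 1]
        y.append(sum(values[k] * x[col_idx[k]] for k in range(start, end)))
    return y


dense = [[4, 0, 0],
         [0, 0, 5],
         [1, 0, 2]]
values, col_idx, row_ptr = to_csr(dense)
print(values, col_idx, row_ptr)                          # [4, 5, 1, 2] [0, 2, 0, 2] [0, 1, 2, 4]
print(csr_matvec(values, col_idx, row_ptr, [1, 2, 3]))   # [4, 15, 7]
```

The matrix-vector product here costs time proportional to the number of nonzeros rather than to n^2, which matters when most entries are zero.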

Accelerating Computations with Fast Matrix Algorithms


Following our exploration of fundamental matrix algorithms in the previous chapter, “Understanding Matrix Algorithms,” where we discussed matrix multiplication, decomposition techniques like LU and QR, and solving systems of linear equations, this chapter delves into the crucial role of fast matrix algorithms in modern computing. The increasing demand for high-performance computing necessitates optimized solutions, and matrix operations are often the bottleneck in many scientific and engineering applications.

The importance of fast matrix algorithms stems from their ability to reduce the computational complexity of matrix operations. Traditional matrix multiplication, for instance, has a time complexity of O(n^3), where n is the dimension of the matrices. For large matrices, this cubic complexity can become prohibitively expensive. This is where algorithms like Strassen’s algorithm come into play.

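Strassen’s algorithm, a divide-and-conquer approach, splits each matrix into four half-size blocks and computes the product with seven recursive block multiplications instead of the eight that a straightforward block recursion needs. This reduces the complexity of matrix multiplication to O(n^log2(7)), approximately O(n^2.81). The extra matrix additions and the recursion overhead make it slower than the standard algorithm for small matrices, but its asymptotic advantage becomes significant for larger ones, where performing fewer arithmetic operations can translate into shorter computation times.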

Let’s illustrate Strassen’s algorithm with pseudocode:

```
function Strassen(A, B)
    // A and B are n x n matrices, where n is a power of 2
    if n <= threshold then
        return standardMatrixMultiply(A, B)  // Use standard algorithm for small matrices

    // Partition A and B into four (n/2) x (n/2) submatrices
    A11, A12, A21, A22 = partition(A)
    B11, B12, B21, B22 = partition(B)

    // Compute intermediate matrices
    M1 = Strassen(A11 + A22, B11 + B22)
    M2 = Strassen(A21 + A22, B11)
    M3 = Strassen(A11, B12 - B22)
    M4 = Strassen(A22, B21 - B11)
    M5 = Strassen(A11 + A12, B22)
    M6 = Strassen(A21 - A11, B11 + B12)
    M7 = Strassen(A12 - A22, B21 + B22)

    // Compute result submatrices
    C11 = M1 + M4 - M5 + M7
    C12 = M3 + M5
    C21 = M2 + M4
    C22 = M1 - M2 + M3 + M6

    // Combine result submatrices
    C = combine(C11, C12, C21, C22)
    return C
end function
```

Another powerful technique for accelerating matrix computations is parallel computing. Modern computers often have multiple cores or access to distributed computing resources. By distributing the workload of matrix operations across multiple processors, we can achieve significant speedups. For example, matrix multiplication can be parallelized by assigning different blocks of the matrices to different processors.

Here’s a pseudocode example illustrating parallel matrix multiplication:

```
function parallelMatrixMultiply(A, B, num_processes)
    // A and B are n x n matrices; n is assumed to be divisible by num_processes
    C = n x n matrix of zeros

    // Divide matrices into blocks and distribute to processes
    block_size = n / num_processes

    for i = 0 to num_processes - 1 do in parallel
        // Each process computes a block of rows of the result matrix
        row_start = i * block_size
        row_end = (i + 1) * block_size

        for j = 0 to n - 1 do
            for k = 0 to n - 1 do
                for row = row_start to row_end - 1 do
                    C[row][j] += A[row][k] * B[k][j]
                end for
            end for
        end for
    end for

    return C
end function
```

This pseudocode demonstrates a simple parallelization by blocks of rows: each process owns a disjoint set of rows of C, so no two processes ever write to the same entry. More sophisticated parallel algorithms can further optimize performance by considering factors like data locality and communication overhead. The use of libraries like MPI (Message Passing Interface) facilitates the implementation of parallel matrix algorithms in languages like C++. In programming, leveraging these parallel computing techniques is crucial for achieving optimal performance with large datasets.
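The same row-block idea can be sketched in Python with a thread pool. This is only an illustration of the partitioning, using threads instead of the MPI processes mentioned above. It assumes NumPy is available, and the function name and worker count are arbitrary. Whether it runs faster than a single `A @ B` depends on the NumPy build, since the underlying BLAS library may already use several threads internally.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor


def parallel_matmul(A, B, num_workers=4):
    """Compute A @ B by giving each worker a contiguous block of rows of A."""
    n = A.shape[0]
    # Row boundaries of the blocks; n does not have to be divisible by num_workers.
    bounds = np.linspace(0, n, num_workers + 1, dtype=int)
    C = np.empty((n, B.shape[1]), dtype=np.result_type(A, B))

    def work(i):
        lo, hi = bounds[i], bounds[i + 1]
        C[lo:hi] = A[lo:hi] @ B  # each worker writes to its own rows only

    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        list(pool.map(work, range(num_workers)))  # wait for all blocks to finish
    return C


rng = np.random.default_rng(0)
A = rng.random((10, 10))
B = rng.random((10, 10))
print(np.allclose(parallel_matmul(A, B), A @ B))  # True
```

Because the row blocks are disjoint, the workers need no locking, which mirrors the structure of the pseudocode.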

The field of fast computing is constantly evolving, with researchers developing new and improved matrix algorithms. Techniques like randomized algorithms and approximation algorithms offer further possibilities for accelerating computations, especially in scenarios where exact solutions are not strictly required.

In conclusion, fast matrix algorithms and parallel computing are essential tools for accelerating computations in various domains. Strassen’s algorithm provides a theoretical improvement in complexity, while parallelization allows us to leverage the power of multi-core processors and distributed systems. Understanding and applying these techniques is crucial for anyone involved in high-performance computing and programming.

The next chapter, “Implementing Matrix Algorithms in Programming,” will detail how to implement these algorithms in practice, focusing on programming languages like Python and C++, and exploring the available libraries and tools for efficient matrix operations.

Implementing Matrix Algorithms in Programming

Building upon the discussion in “Accelerating Computations with Fast Matrix Algorithms,” where we explored the theoretical advantages of algorithms like Strassen’s and the power of parallel computing for matrix operations, this chapter delves into the practical aspects of implementing these matrix algorithms in programming languages. The previous chapter highlighted the importance of fast computation, and here, we’ll explore how to achieve it through efficient coding practices and leveraging appropriate libraries. We’ll focus primarily on Python and C++, two languages widely used in scientific computing and programming.

Python offers a high level of abstraction and a rich ecosystem of libraries specifically designed for numerical computation. NumPy is the cornerstone, providing efficient array objects and a vast collection of functions for performing mathematical operations on these arrays. SciPy builds upon NumPy, offering more advanced algorithms, including those for linear algebra, optimization, and signal processing. For instance, implementing matrix multiplication in NumPy is incredibly straightforward:

```python
import numpy as np

# Create two matrices
A = np.array([[1, 2], [3, 4]])
B = np.array([[5, 6], [7, 8]])

# Perform matrix multiplication
C = np.matmul(A, B)

print(C)
```

This concise code leverages NumPy’s optimized `matmul` function, which is significantly faster than implementing matrix multiplication from scratch using nested loops. Furthermore, NumPy utilizes optimized BLAS (Basic Linear Algebra Subprograms) and LAPACK (Linear Algebra PACKage) libraries under the hood, further enhancing performance. These libraries are often written in Fortran or C/C++ and are highly optimized for specific hardware architectures.

For even greater performance, especially when dealing with very large matrices, libraries like CuPy can be used. CuPy provides a NumPy-compatible interface that allows you to execute matrix operations on NVIDIA GPUs, enabling massive parallelization and significant speedups.

In C++, achieving efficient matrix algorithm implementations requires a bit more effort but offers fine-grained control over memory management and optimization. Libraries like Eigen and Armadillo are popular choices. Eigen is a header-only library that provides high-performance linear algebra operations, while Armadillo offers a more MATLAB-like syntax.

Here’s an example of matrix multiplication using Eigen:

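```cpp
#include <iostream>
#include <Eigen/Dense>

using namespace Eigen;

int main() {
    // Fixed-size 2 x 2 matrices of doubles, filled row by row
    Matrix2d A;
    A << 1, 2,
         3, 4;

    Matrix2d B;
    B << 5, 6,
         7, 8;

    // For Eigen matrices, operator* is the matrix product
    Matrix2d C = A * B;

    std::cout << C << std::endl;
    return 0;
}
```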

Unlike NumPy, Eigen ships its own vectorized implementations of these operations, and it can optionally be configured to delegate to an external BLAS or LAPACK implementation when one is available. In C++, you also have more control over compiler optimizations and can fine-tune the code for specific hardware. For example, you can use compiler flags to enable vectorization and loop unrolling, further improving performance.

Implementing more complex matrix algorithms, such as Strassen's algorithm, requires careful attention to memory management and recursion. While the theoretical complexity of Strassen's algorithm is lower than that of the standard matrix multiplication algorithm (O(n^log2(7)), roughly O(n^2.81), vs. O(n^3)), the overhead associated with recursive calls and temporary memory allocation can make it less efficient for small matrix sizes. Therefore, it's crucial to benchmark different algorithms and choose the most appropriate one based on the specific problem size and hardware.
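A compact NumPy sketch of Strassen's algorithm with a cutoff is shown below. It assumes square matrices of equal size and, as a simplification for this example, falls back to the ordinary product whenever the size is odd or at most `threshold` (a hypothetical tuning parameter) instead of padding the matrices.

```python
import numpy as np


def strassen(A, B, threshold=64):
    """Multiply square matrices A and B using Strassen's seven-product recursion."""
    n = A.shape[0]
    if n <= threshold or n % 2:
        return A @ B  # small or odd-sized blocks: use the ordinary product
    h = n // 2
    A11, A12, A21, A22 = A[:h, :h], A[:h, h:], A[h:, :h], A[h:, h:]
    B11, B12, B21, B22 = B[:h, :h], B[:h, h:], B[h:, :h], B[h:, h:]

    # Seven recursive products instead of eight.
    M1 = strassen(A11 + A22, B11 + B22, threshold)
    M2 = strassen(A21 + A22, B11, threshold)
    M3 = strassen(A11, B12 - B22, threshold)
    M4 = strassen(A22, B21 - B11, threshold)
    M5 = strassen(A11 + A12, B22, threshold)
    M6 = strassen(A21 - A11, B11 + B12, threshold)
    M7 = strassen(A12 - A22, B21 + B22, threshold)

    # Assemble the four quadrants of the result.
    C = np.empty((n, n), dtype=M1.dtype)
    C[:h, :h] = M1 + M4 - M5 + M7
    C[:h, h:] = M3 + M5
    C[h:, :h] = M2 + M4
    C[h:, h:] = M1 - M2 + M3 + M6
    return C


rng = np.random.default_rng(0)
A = rng.integers(-5, 5, size=(8, 8))
B = rng.integers(-5, 5, size=(8, 8))
print(np.array_equal(strassen(A, B, threshold=2), A @ B))  # True
```

Every level of recursion allocates temporary sums and products, which is the memory overhead discussed above. Timing this function against `A @ B` for a few sizes and thresholds is a simple way to find where, if anywhere, it pays off on a given machine.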

Furthermore, parallel programming techniques, such as using OpenMP or CUDA, can be employed to further accelerate matrix algorithms. OpenMP allows you to parallelize loops and other code sections with compiler directives, while CUDA provides more fine-grained control over GPU resources. These techniques are particularly effective for large matrices where the computational workload can be distributed across multiple cores or GPUs.

Choosing the right library and optimization techniques depends heavily on the specific application and the available resources. Python offers a rapid prototyping environment and ease of use, while C++ provides greater control and potential for higher performance. Understanding the strengths and weaknesses of each language and the available libraries is essential for effectively implementing matrix algorithms and achieving fast computation in your programming projects.

Conclusions

Mastering matrix algorithms empowers you to tackle complex computational tasks efficiently. This guide provides a comprehensive understanding, from fundamental concepts to practical implementation, allowing you to optimize your programming and calculations.