In today’s demanding software landscape, efficient memory management is crucial for performance. This article provides practical tips and strategies to optimize memory usage in your applications, leading to faster execution and reduced resource consumption. Learning these techniques will significantly improve your software’s overall efficiency.

Understanding Memory Management

Memory management is the cornerstone of efficient software development. Without a solid understanding of how memory is allocated, used, and released, applications can suffer from performance bottlenecks, instability, and even crashes. This chapter delves into the fundamental concepts of memory management, providing a foundation for the more advanced optimization techniques we’ll explore later.

At its core, memory management is the process of assigning memory to programs and processes and reclaiming it when it is no longer needed. Much of this happens dynamically: memory is requested as needed while a program runs. Two primary operations are involved. Allocation reserves a portion of memory for a specific purpose, such as storing data or executing code. Deallocation releases previously allocated memory back to the allocator or the operating system so that it can be reused.

Garbage collection is another crucial aspect of memory management, particularly in languages like Java and C#. *Garbage collection is an automatic memory management technique that identifies and reclaims memory that is no longer in use by a program.* It relieves developers of the burden of manually deallocating memory and reduces the risk of memory leaks. However, garbage collection also introduces overhead: the collector consumes CPU time, and many collectors pause program execution, at least briefly, while they do their work. Understanding how garbage collection works in your chosen programming language is vital for optimizing memory usage.

Different programming languages employ different memory management strategies. C and C++ rely on manual memory management: developers are responsible for explicitly allocating and deallocating memory, using functions like `malloc` and `free` (or the `new` and `delete` operators in C++). This approach offers fine-grained control over memory usage, but it also introduces the risk of memory leaks and dangling pointers if memory is not managed carefully.
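As a minimal sketch of manual management in C++, the code below pairs one `std::malloc` with exactly one `std::free` and checks for allocation failure. The function name `sumViaHeapBuffer` and its task are hypothetical, chosen only for illustration.

```cpp
#include <cstddef>
#include <cstdlib>
#include <limits>

// Hypothetical example: sums 1..n through a manually managed heap buffer.
// Returns -1 if the allocation fails or the requested size would overflow.
long long sumViaHeapBuffer(std::size_t n) {
    if (n == 0) return 0;  // nothing to allocate
    if (n > std::numeric_limits<std::size_t>::max() / sizeof(long long)) return -1;

    // Allocation: malloc returns raw memory, or nullptr on failure.
    auto* values = static_cast<long long*>(std::malloc(n * sizeof(long long)));
    if (values == nullptr) return -1;

    for (std::size_t i = 0; i < n; ++i) values[i] = static_cast<long long>(i + 1);
    long long total = 0;
    for (std::size_t i = 0; i < n; ++i) total += values[i];

    // Deallocation: exactly one free for the one malloc above.
    std::free(values);
    // `values` is now a dangling pointer: it must not be read or freed again.
    return total;
}
```

Omitting the `std::free` call would leak the buffer; calling it twice, or reading through `values` afterwards, would be undefined behavior.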

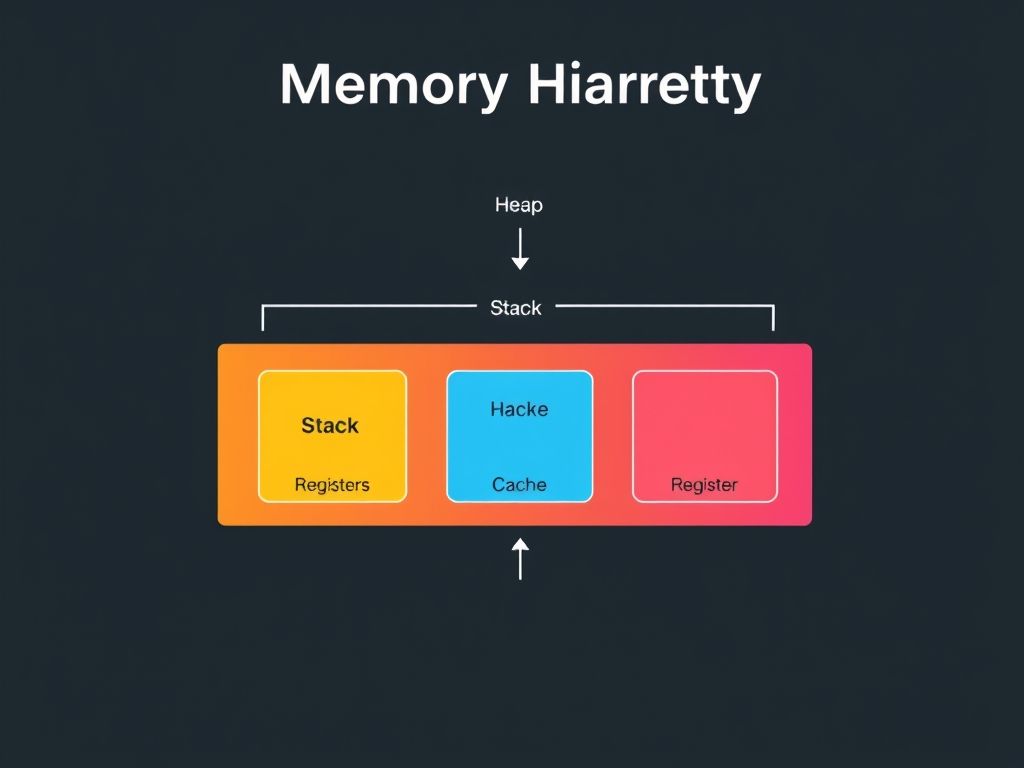

For application code, the two most important memory regions are the stack and the heap. The stack holds local variables, function call information, and return addresses. It operates on a Last-In, First-Out (LIFO) principle: the last item pushed onto the stack is the first one removed. Stack memory is managed automatically by code the compiler generates on function entry and exit, which makes it fast and efficient for temporary data. The stack has a limited size (often only a few megabytes per thread), so avoid placing large amounts of data on it; doing so can cause a stack overflow.

The heap, on the other hand, is a region of memory used for dynamic memory allocation. It is more flexible but also more complex to manage. Memory is allocated on the heap using functions like `malloc` (in C/C++) or the `new` operator (in C++ and Java). The heap holds objects and data structures whose lifetime is not tied to a single function call. Unlike stack memory, heap memory is not released automatically when a function returns: in languages with manual memory management it must be explicitly deallocated to prevent memory leaks, and in garbage-collected languages it is reclaimed only once it is no longer reachable. This is where understanding *resource management* becomes crucial.
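The difference between the two regions can be sketched in C++ as follows. The `Point` type and the `stackAndHeap` function are hypothetical; what matters is where each object lives and what releases it.

```cpp
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical type used only for this illustration.
struct Point {
    double x;
    double y;
};

// Hypothetical example contrasting stack and heap allocation.
double stackAndHeap(std::size_t count) {
    Point local{1.0, 2.0};  // stack: released automatically when the function returns

    Point* raw = new Point{3.0, 4.0};  // heap via new...
    double sum = local.x + raw->y;
    delete raw;                        // ...must be paired with exactly one delete

    // Heap object owned by a stack object: unique_ptr deletes it automatically.
    auto owned = std::make_unique<Point>(Point{5.0, 6.0});
    sum += owned->x;

    // Large or variable-size data belongs on the heap (here, inside std::vector),
    // not in a large local array that could overflow the stack.
    std::vector<double> values(count, 1.0);
    for (double v : values) sum += v;
    return sum;
}
```

`std::make_unique` ties the heap object's lifetime to a stack object, so the memory is released even if an exception is thrown; the raw `new`/`delete` pair offers no such guarantee.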

Memory leaks are a common problem in software development, especially in languages with manual memory management. A memory leak occurs when memory is allocated but never deallocated, gradually depleting the available memory. Over time, this can cause the application to slow down, become unstable, and eventually crash. To avoid memory leaks, ensure that every memory allocation is paired with a corresponding deallocation. Memory profilers and leak detectors, such as Valgrind's Memcheck or AddressSanitizer's leak checker for C and C++, can help identify leaks in your code. Debugging memory leaks is a vital *programming skill*.

Here are some common causes of memory leaks and how to avoid them:

- Forgetting to deallocate memory: Always deallocate memory when it is no longer needed. Pair every `malloc` with `free` and, in C++, every `new` with `delete` (or `new[]` with `delete[]`).

- Losing pointers to allocated memory: If you lose the pointer to an allocated block of memory, you can no longer deallocate it. Be careful when passing pointers around and ensure that you always have a valid pointer to the allocated memory.

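- Circular references: Under reference counting (for example, C++ `std::shared_ptr` or Swift's ARC), objects that refer to each other keep each other's counts above zero, so none of them is ever freed. Tracing garbage collectors, such as those in Java and C#, can reclaim unreachable cycles, but they cannot reclaim objects that are still reachable, for example through a long-lived collection or listener list. Break circular references (or use weak references) and remove objects from long-lived structures when they are no longer needed.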
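The circular-reference case can be sketched with C++'s reference-counted `std::shared_ptr`. This is a swapped-in illustration: C++ uses reference counting here, not a tracing garbage collector. The `Parent`/`Child` types and the `parentIsFreed` helper are hypothetical. The back-reference is a `std::weak_ptr`, which does not contribute to the reference count, so the pair is destroyed once the last outside owner goes away.

```cpp
#include <memory>

struct Child;  // forward declaration so Parent can refer to Child

// Hypothetical types: the parent owns its child.
struct Parent {
    std::shared_ptr<Child> child;
};

struct Child {
    // Non-owning back-reference. A std::shared_ptr<Parent> here would create
    // a cycle whose reference counts never reach zero.
    std::weak_ptr<Parent> parent;
};

// Hypothetical helper: builds a linked parent/child pair, drops the only
// external owner, and reports whether the parent object was destroyed.
bool parentIsFreed() {
    std::weak_ptr<Parent> observer;
    {
        auto parent = std::make_shared<Parent>();
        parent->child = std::make_shared<Child>();
        parent->child->parent = parent;  // weak: does not increase the count
        observer = parent;
    }  // the last shared_ptr to the parent goes out of scope here
    return observer.expired();
}
```

If `Child::parent` were a `std::shared_ptr<Parent>` instead, the parent and child would keep each other alive and both would leak.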

Effective *memory optimization* starts with a deep understanding of these fundamental concepts. By understanding how memory is allocated, deallocated, and garbage collected, developers can write more efficient and reliable code.

Building on this foundation, the next chapter will detail strategies for minimizing memory usage during program execution.

Optimizing Memory Allocation

Building on the previous chapter, “Understanding Memory Management,” which covered allocation, deallocation, garbage collection, and the stack and heap, we now turn to strategies for *optimizing memory allocation* to minimize memory usage during program execution. This chapter focuses on practical techniques for writing more efficient, resource-friendly code.

One of the most effective ways to optimize memory allocation is to choose the right data structure for the job. Consider the trade-offs between arrays and linked lists. Linked lists can grow and shrink easily, but every node stores at least one pointer in addition to its data, and individually allocated nodes also carry the heap allocator's per-allocation overhead. When the size of the data is known in advance or rarely changes, an array is usually significantly more memory-efficient: it stores its elements in contiguous memory, with no extra pointer per element. This is a crucial aspect of **memory optimization**.

Consider the following scenario: you need to store a fixed number of student IDs. An array is a more efficient choice here than a linked list.

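```cpp
#include <iostream>

// Array example: space for 100 integers, stored contiguously with no per-element overhead
int studentIDs[100];

// Linked list example: every element also stores a pointer to the next node,
// so each node needs more memory than the ID alone
struct Node {
    int studentID;
    Node* next;
};

int main() {
    std::cout << "Bytes per array element: " << sizeof(studentIDs[0]) << '\n';
    std::cout << "Bytes per list node:     " << sizeof(Node) << '\n';
}
```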

Another powerful technique is pre-allocating memory whenever possible. Dynamic memory allocation is flexible but can be expensive: each request makes the memory manager search for a suitable free block and update its bookkeeping. By pre-allocating memory, we reduce the number of these requests and improve performance. This is a valuable **programming tip**.

For instance, if you know that your program will need to store a maximum of 1000 items, allocate a buffer of that size upfront instead of allocating memory incrementally as items are added.
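In C++, pre-allocating for a known upper bound is commonly done with `std::vector::reserve`. The sketch below assumes the 1000-item maximum from the example above; `collectItems` is a hypothetical name.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical example: fill a vector whose maximum size is known in advance.
std::vector<int> collectItems(std::size_t maxItems) {
    std::vector<int> items;
    items.reserve(maxItems);  // one up-front allocation sized for the known maximum
    for (std::size_t i = 0; i < maxItems; ++i) {
        // No reallocation happens here while size() stays within capacity().
        items.push_back(static_cast<int>(i));
    }
    return items;
}
```

Calling `collectItems(1000)` performs a single buffer allocation. Without `reserve`, the vector would grow geometrically, reallocating and moving its elements several times on the way to 1000 items.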

Furthermore, avoiding unnecessary object creation is paramount. Object creation often involves allocating memory on the heap, which can be a costly operation. If an object is only needed temporarily, consider reusing existing objects or using lightweight data structures instead. String manipulation, in particular, can be a source of unnecessary object creation. Instead of creating new string objects for every modification, use mutable string buffers or string builders.

Consider this example in Java:

```java
public class StringConcatenation {
    public static void main(String[] args) {
        // Inefficient: creates a new String object in each iteration
        String slow = "";
        for (int i = 0; i < 1000; i++) {
            slow += i; // allocates a new String and copies the old contents
        }

        // Efficient: StringBuilder appends into an internal buffer that grows as needed
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < 1000; i++) {
            sb.append(i); // modifies the StringBuilder object directly
        }
        String result = sb.toString();

        System.out.println(slow.equals(result)); // prints "true"
    }
}
```

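The `StringBuilder` version dramatically reduces allocation overhead. It appends into an internal buffer that only occasionally needs to grow, whereas the `+=` version allocates a new `String` and copies all previous characters on every iteration, so its total copying grows quadratically with the number of iterations.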
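The same idea, reusing a temporary object instead of creating a new one each time, applies in C++. In this sketch (the `rowMedians` function is hypothetical), one scratch vector is reused for every row: `clear()` removes its elements but keeps its capacity, so the buffer only reallocates when a row is longer than any earlier one.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical example: the median of each non-empty row, without modifying the input.
// For even-length rows this returns the upper of the two middle values.
std::vector<int> rowMedians(const std::vector<std::vector<int>>& rows) {
    std::vector<int> medians;
    medians.reserve(rows.size());
    std::vector<int> scratch;  // created once, reused for every row
    for (const auto& row : rows) {
        if (row.empty()) continue;  // an empty row has no median
        scratch.clear();            // drops the elements, keeps the capacity
        scratch.insert(scratch.end(), row.begin(), row.end());
        auto mid = scratch.begin() + static_cast<std::ptrdiff_t>(scratch.size() / 2);
        std::nth_element(scratch.begin(), mid, scratch.end());  // partial sort around mid
        medians.push_back(*mid);
    }
    return medians;
}
```

Declaring `scratch` inside the loop would also work, but it would construct a new vector, and typically perform a new heap allocation, for every row.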

Effective **resource management** also plays a vital role. Releasing memory that is no longer needed is crucial for preventing memory leaks and keeping the program stable. In languages with manual memory management (like C and C++), the programmer must explicitly deallocate memory using `free` or `delete`. In languages with garbage collection (like Java and C#), the garbage collector automatically reclaims memory that is no longer reachable. Even so, avoid holding references to objects longer than necessary: an object that is still referenced cannot be collected, so lingering references increase memory consumption.

In conclusion, optimizing memory allocation combines careful data structure selection, pre-allocation, and minimizing unnecessary object creation. Applying these techniques can significantly reduce a program's memory footprint and improve overall performance. They are essential to efficient **resource management** and effective **memory optimization**, and they are fundamental **programming tips** for any developer aiming to improve application performance. This understanding of memory allocation leads naturally to the next chapter, where we explore "Resource Management Strategies" in greater detail.

Resource Management Strategies

Following our discussion of optimizing memory allocation, where we covered choosing efficient data structures (such as arrays instead of linked lists), pre-allocating memory, and avoiding unnecessary object creation, we now turn to another critical aspect of memory optimization: resource management.

Effective resource management is paramount for maintaining application stability and performance. Poor resource management can lead to memory leaks, performance bottlenecks, and ultimately application crashes. This chapter explores techniques for managing and releasing resources effectively, focusing on closing files and connections promptly and on using memory pools and caching.

One of the most basic, yet often overlooked, aspects of resource management is the proper handling of files and network connections. Failing to close these resources after use can lead to resource exhaustion, preventing other parts of the application, or even other applications on the system, from accessing them.

Consider the following C++ example:

```cpp
#include <fstream>
#include <iostream>
#include <string>

void readFile(const std::string& filename) {
    std::ifstream file(filename);
    if (file.is_open()) {
        std::string line;
        while (std::getline(file, line)) {
            std::cout << line << std::endl;
        }
        file.close(); // Explicitly close the file
    } else {
        std::cerr << "Unable to open file: " << filename << std::endl;
    }
}
```

In this example, the `file.close()` call explicitly releases the file resource. Because `std::ifstream` follows RAII (Resource Acquisition Is Initialization), the same mechanism smart pointers use to manage heap memory, its destructor also closes the file automatically when `file` goes out of scope. You can therefore drop the explicit call and simply let the object go out of scope:

```cpp
#include <fstream>
#include <iostream>
#include <string>

void readFile(const std::string& filename) {
    std::ifstream file(filename);
    if (file.is_open()) {
        std::string line;
        while (std::getline(file, line)) {
            std::cout << line << std::endl;
        }
        // File is automatically closed when 'file' goes out of scope
    } else {
        std::cerr << "Unable to open file: " << filename << std::endl;
    }
}
```

This approach is generally preferred because the file is released on every exit path, including early returns and exceptions, without any extra code. The same principle applies to network connections, database connections, and other types of resources. Always ensure that resources are released in a timely manner, ideally using RAII or similar techniques.
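The same RAII principle can wrap resources that come from C-style APIs. As a minimal sketch, `std::unique_ptr` with a custom deleter closes a `std::FILE*` automatically. The `FileCloser`, `FileHandle`, and `roundTrip` names are hypothetical, and `std::tmpfile` is used only so the example needs no real path.

```cpp
#include <cstdio>
#include <memory>
#include <string>

// Deleter invoked by std::unique_ptr when the handle is destroyed
// (unique_ptr only calls it for a non-null pointer).
struct FileCloser {
    void operator()(std::FILE* f) const noexcept { std::fclose(f); }
};

// Hypothetical RAII handle type for C stdio files.
using FileHandle = std::unique_ptr<std::FILE, FileCloser>;

// Hypothetical example: writes text to a temporary file and reads it back.
// The file is closed when `file` goes out of scope, on every return path
// and also if an exception propagates.
std::string roundTrip(const std::string& text) {
    FileHandle file(std::tmpfile());
    if (!file) return {};  // could not create a temporary file
    std::fputs(text.c_str(), file.get());
    std::rewind(file.get());
    std::string result;
    for (int ch = std::fgetc(file.get()); ch != EOF; ch = std::fgetc(file.get())) {
        result.push_back(static_cast<char>(ch));
    }
    return result;
}
```

A socket or database handle can be wrapped the same way by giving the deleter the matching close function.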

Beyond basic resource handling, more advanced techniques such as memory pools and caching can significantly improve performance and reduce memory overhead. Memory pools involve pre-allocating a large chunk of memory and then allocating smaller blocks from this pool as needed. This avoids the overhead of repeatedly allocating and deallocating memory from the system's heap.

*Memory pools are particularly useful when dealing with frequent allocations and deallocations of objects of the same size.* They can dramatically reduce fragmentation and improve allocation speed.
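To make the idea concrete, here is a minimal fixed-capacity pool in C++. It is an illustrative sketch with hypothetical names (`ObjectPool`, `create`, `destroy`), not a production allocator: it is not thread-safe, and the caller must `destroy()` every object before the pool itself is destroyed. All slots come from one up-front allocation, and freed slots are recycled through a free list.

```cpp
#include <cstddef>
#include <new>
#include <utility>
#include <vector>

// Hypothetical fixed-capacity pool for objects of a single type T.
template <typename T>
class ObjectPool {
public:
    explicit ObjectPool(std::size_t capacity) : storage_(capacity) {
        free_.reserve(capacity);
        for (auto& slot : storage_) free_.push_back(slot.bytes);
    }

    ObjectPool(const ObjectPool&) = delete;             // free_ points into storage_,
    ObjectPool& operator=(const ObjectPool&) = delete;  // so copying would be unsafe

    // Constructs a T in a free slot; returns nullptr when the pool is exhausted.
    template <typename... Args>
    T* create(Args&&... args) {
        if (free_.empty()) return nullptr;
        unsigned char* slot = free_.back();
        free_.pop_back();
        return ::new (static_cast<void*>(slot)) T(std::forward<Args>(args)...);
    }

    // Destroys an object obtained from create() and returns its slot to the free list.
    void destroy(T* obj) {
        obj->~T();
        free_.push_back(reinterpret_cast<unsigned char*>(obj));
    }

    std::size_t available() const { return free_.size(); }

private:
    struct Slot {
        alignas(T) unsigned char bytes[sizeof(T)];  // raw storage for one T
    };
    std::vector<Slot> storage_;         // single up-front allocation for all slots
    std::vector<unsigned char*> free_;  // slots currently available
};
```

Because every slot has the same size, allocating and freeing reduce to a pop and a push on the free list, with no calls into the system heap after construction.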

Caching, on the other hand, involves storing frequently accessed data in a readily available location, such as memory, to avoid repeatedly fetching it from slower storage like disk or network. Caching can significantly improve application responsiveness, but it also requires careful management to ensure data consistency and avoid excessive memory usage.
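One common way to keep a cache from using excessive memory is to bound its size and evict the least recently used entry when it is full. The sketch below is a minimal least-recently-used (LRU) cache; the class name and the choice of LRU as the eviction policy are assumptions for illustration, and it is not thread-safe.

```cpp
#include <cstddef>
#include <list>
#include <optional>
#include <unordered_map>
#include <utility>

// Hypothetical bounded LRU cache: capacity caps how many entries (and how much memory) it holds.
template <typename Key, typename Value>
class LruCache {
public:
    explicit LruCache(std::size_t capacity) : capacity_(capacity) {}

    std::optional<Value> get(const Key& key) {
        auto it = index_.find(key);
        if (it == index_.end()) return std::nullopt;
        // Move the entry to the front: it is now the most recently used.
        order_.splice(order_.begin(), order_, it->second);
        return it->second->second;
    }

    void put(const Key& key, Value value) {
        if (capacity_ == 0) return;
        auto it = index_.find(key);
        if (it != index_.end()) {
            it->second->second = std::move(value);
            order_.splice(order_.begin(), order_, it->second);
            return;
        }
        if (order_.size() == capacity_) {
            // Evict the least recently used entry, kept at the back of the list.
            index_.erase(order_.back().first);
            order_.pop_back();
        }
        order_.emplace_front(key, std::move(value));
        index_[key] = order_.begin();
    }

    std::size_t size() const { return order_.size(); }

private:
    using Entry = std::pair<Key, Value>;
    std::size_t capacity_;
    std::list<Entry> order_;  // front = most recently used
    std::unordered_map<Key, typename std::list<Entry>::iterator> index_;
};
```

The hash map gives fast lookup, while the list records recency; `splice` moves an entry to the front without copying it or invalidating the stored iterators.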

Effective memory optimization often involves a combination of these techniques. For instance, a game engine might use memory pools for allocating game objects and caching for storing frequently used textures and models.

Here are some programming tips to keep in mind:

- Always close files and connections promptly.

- Use RAII to ensure resource release even in the presence of exceptions.

- Consider using memory pools for frequent allocations and deallocations.

- Implement caching strategically to improve performance.

- Monitor resource usage to identify potential leaks or bottlenecks.

By adopting these resource management strategies, developers can create more robust, efficient, and scalable applications. The next chapter will delve into techniques for profiling and diagnosing memory-related issues.

Conclusions

By implementing the memory optimization techniques discussed, you can significantly enhance the performance and stability of your applications. Remember to prioritize proactive memory management to prevent performance bottlenecks and resource exhaustion.